OpenHuEval: Evaluating Large Language Model on Hungarian Specifics

Comparison of related benchmarks.

| Benchmark | Real User Query | Self-awareness Evaluation | Proverb Reasoning | Generative Task & LLM-as-Judge | Hungarian Language | Comprehensive Hungarian-specific |
|---|---|---|---|---|---|---|
| WildBench | ✔ | ✘ | ✘ | ✔ | ✘ | ✘ |
| SimpleQA, ChineseSimpleQA | ✘ | ✔ | ✘ | ✔ | ✘ | ✘ |
| MAPS | ✘ | ✘ | ✔ | ✘ | ✘ | ✘ |
| MARC, MMMLU et al. | ✘ | ✘ | ✘ | ✘ | ✔ | ✘ |
| BenchMAX | ✘ | ✘ | ✘ | ✔ | ✔ | ✘ |
| MILQA | ✘ | ✘ | ✘ | ✘ | ✔ | ✘ |
| HuLU | ✘ | ✘ | ✘ | ✘ | ✔ | ✘ |
| OpenHuEval (ours) | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |

OpenHuEval

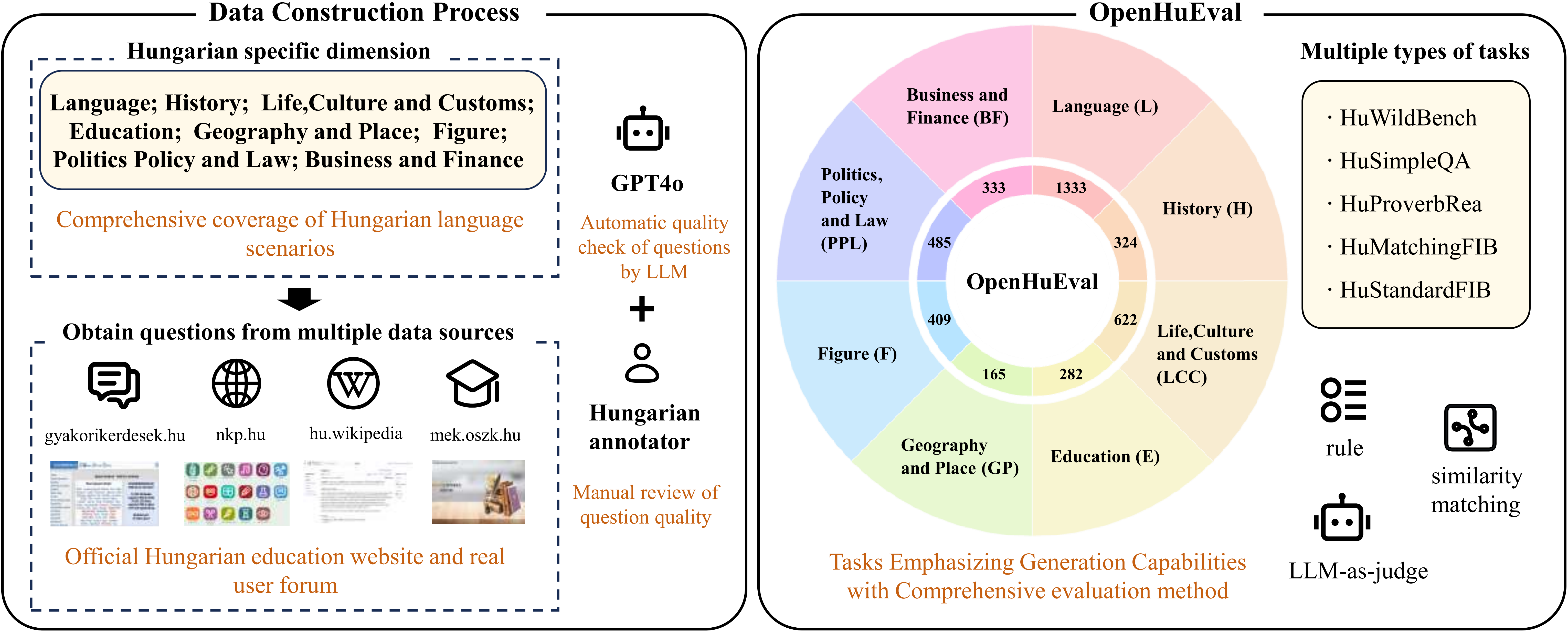

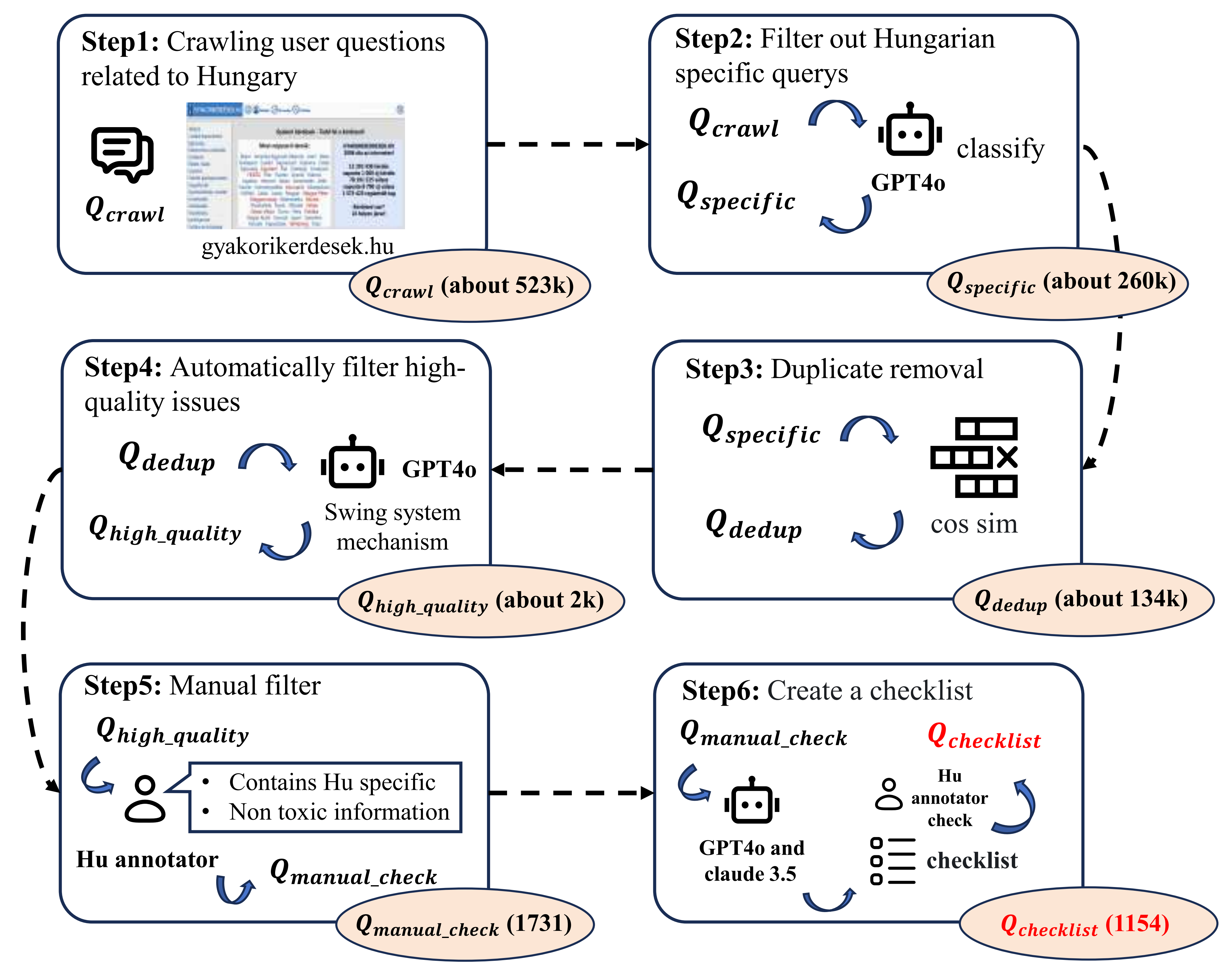

We introduce OpenHuEval, the first benchmark for LLMs focusing on the Hungarian language and Hungarian specifics. OpenHuEval is constructed from a large collection of Hungarian-specific materials drawn from multiple sources. Its construction incorporates the latest design principles for evaluating LLMs: using real user queries from the internet, emphasizing the assessment of LLMs' generative capabilities, and employing LLM-as-judge to make evaluations more multidimensional and accurate. Consequently, OpenHuEval provides a comprehensive, in-depth, and scientifically accurate assessment of LLM performance on the Hungarian language and its specifics. We evaluated current mainstream LLMs, including both traditional LLMs and recently developed large reasoning models (LRMs). The results demonstrate a significant need for evaluation and model optimization tailored to the Hungarian language and its specifics. We also established a framework for analyzing the thinking processes of LRMs with OpenHuEval, revealing intrinsic patterns and mechanisms of these models in non-English languages, with Hungarian serving as a representative example.

Construction of OpenHuEval

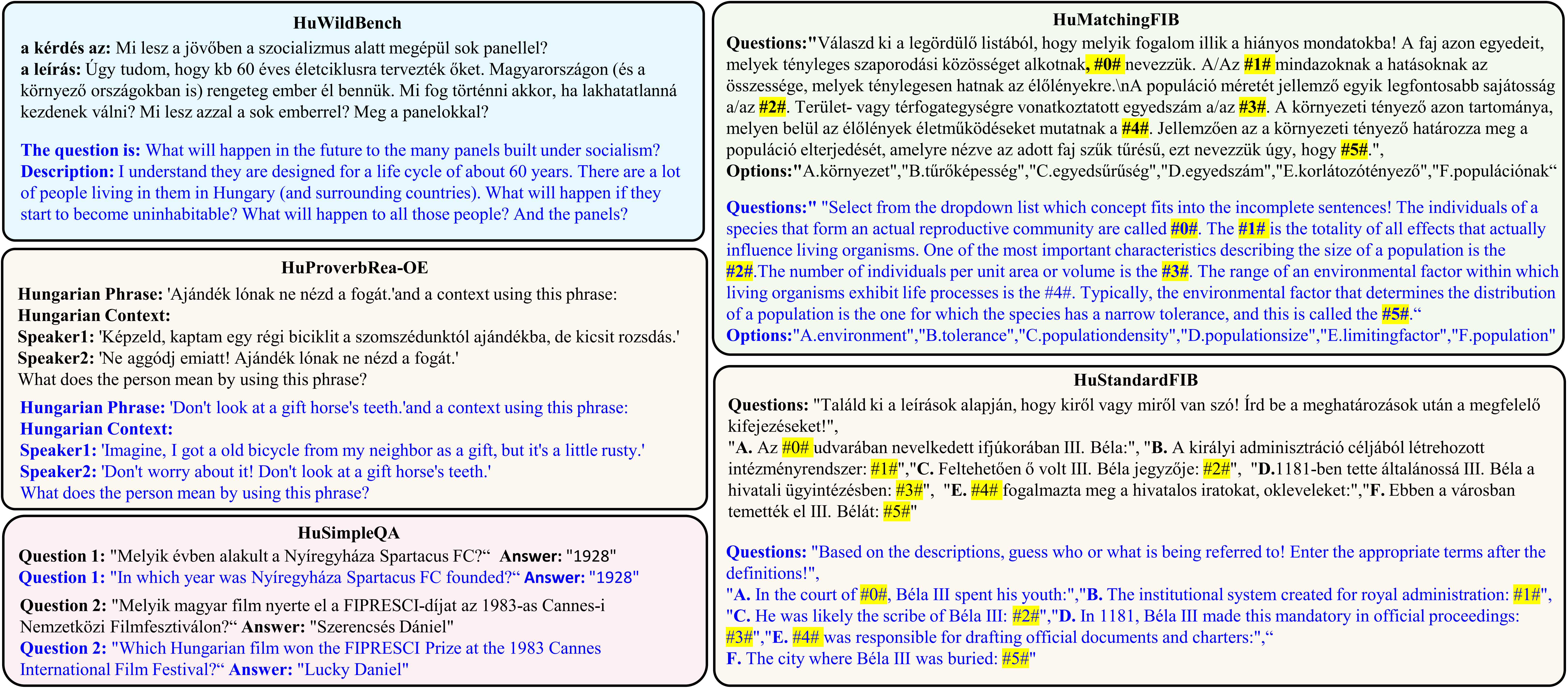

Examples of OpenHuEval

Data Statistics of OpenHuEval

The Hungarian-Specific Dimensions (HuSpecificDim).

| HuSpecificDim | Definition | #Questions |
|---|---|---|
| Language (𝓛) | Basic knowledge of the Hungarian language and Hungarian proverbs and sayings | 1333 |
| History (𝓗) | Historical events and historical development of Hungary | 324 |
| Life, Culture, and Custom (𝓛𝓒𝓒) | Religion, rituals, culture, holidays, and the daily life of Hungarians | 622 |
| Education and Profession (𝓔𝓟) | Education system in Hungary and related professions | 282 |
| Geography and Place (𝓖𝓟) | Geographical knowledge of Hungary, cities, and locations | 165 |
| Figure (𝓕) | Famous figures of Hungary | 409 |
| Politics, Policy, and Law (𝓟𝓟𝓛) | Politics, policies, and laws of Hungary | 485 |
| Business and Finance (𝓑𝓕) | Business and finance in Hungary | 333 |
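As a quick arithmetic check on the dimension table, the per-dimension counts can be summed and turned into shares of the whole benchmark. The sketch below simply copies the counts from the table; the helper name `dimension_shares` is hypothetical and not part of any OpenHuEval code.

```python
# Per-dimension question counts, copied from the HuSpecificDim table.
HU_SPECIFIC_DIM_COUNTS = {
    "Language": 1333,
    "History": 324,
    "Life, Culture, and Custom": 622,
    "Education and Profession": 282,
    "Geography and Place": 165,
    "Figure": 409,
    "Politics, Policy, and Law": 485,
    "Business and Finance": 333,
}


def dimension_shares(counts: dict[str, int]) -> dict[str, float]:
    """Hypothetical helper: each dimension's share of the total, in percent."""
    total = sum(counts.values())
    return {name: 100.0 * n / total for name, n in counts.items()}


total = sum(HU_SPECIFIC_DIM_COUNTS.values())
print(total)  # 3953
for name, share in dimension_shares(HU_SPECIFIC_DIM_COUNTS).items():
    print(f"{name}: {share:.1f}%")
```

The counts sum to 3,953 questions, the same total as the per-task counts in the tasks table, which suggests each question is assigned to exactly one dimension. Language is by far the largest dimension, at roughly a third of all questions.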

Tasks of OpenHuEval

| Task | HuSpecificDim | Judge | Question Type | #Question |

|---|---|---|---|---|

| HuWildBench | 𝓛𝓒𝓒, 𝓔𝓟, 𝓟𝓟𝓛, 𝓑𝓕 | llm, checklist | OE | 1154 |

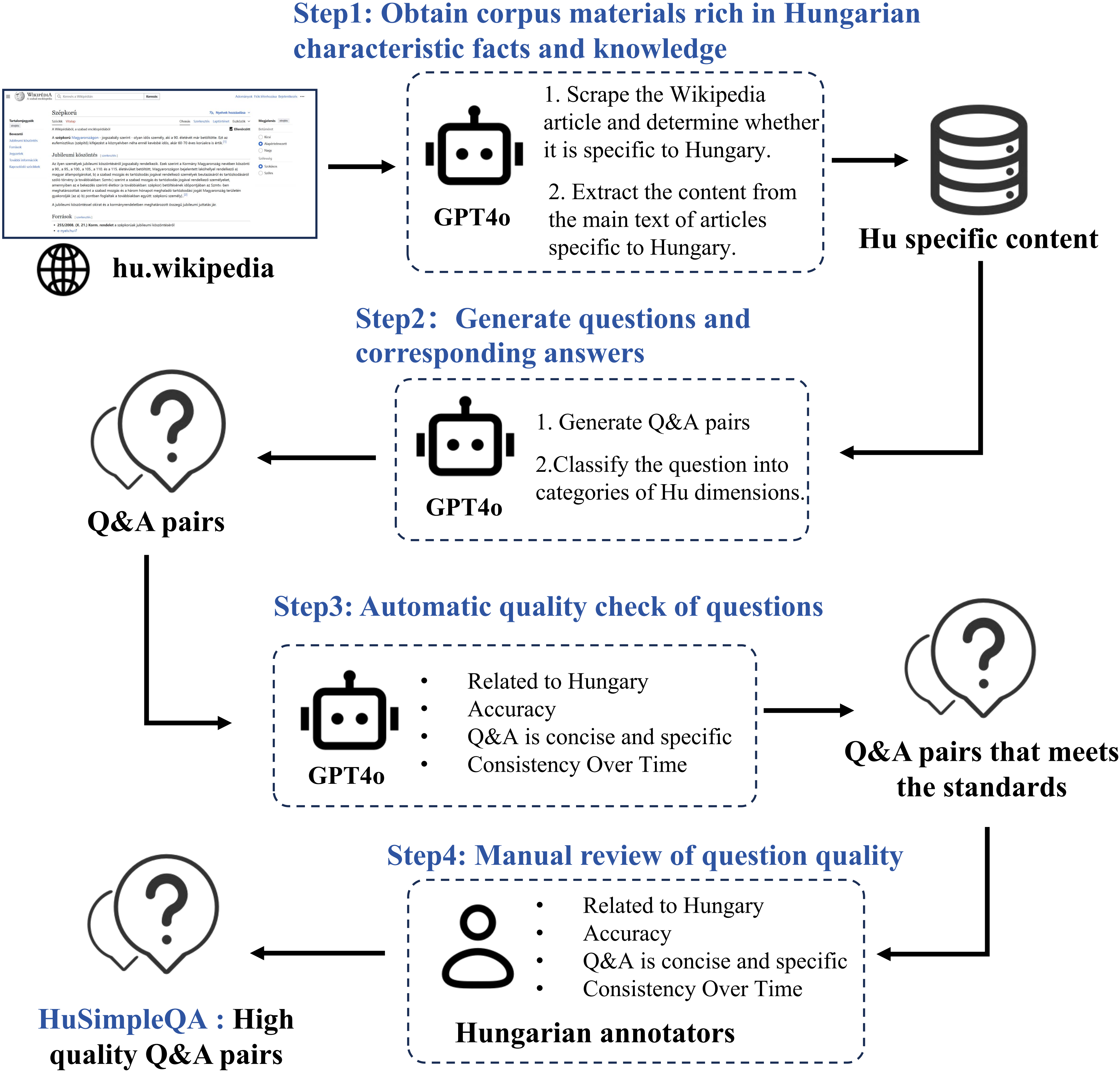

| HuSimpleQA | 𝓛, 𝓗, 𝓛𝓒𝓒, 𝓔𝓟, 𝓖𝓟, 𝓕, 𝓟𝓟𝓛, 𝓑𝓕 | llm | OE | 1293 |

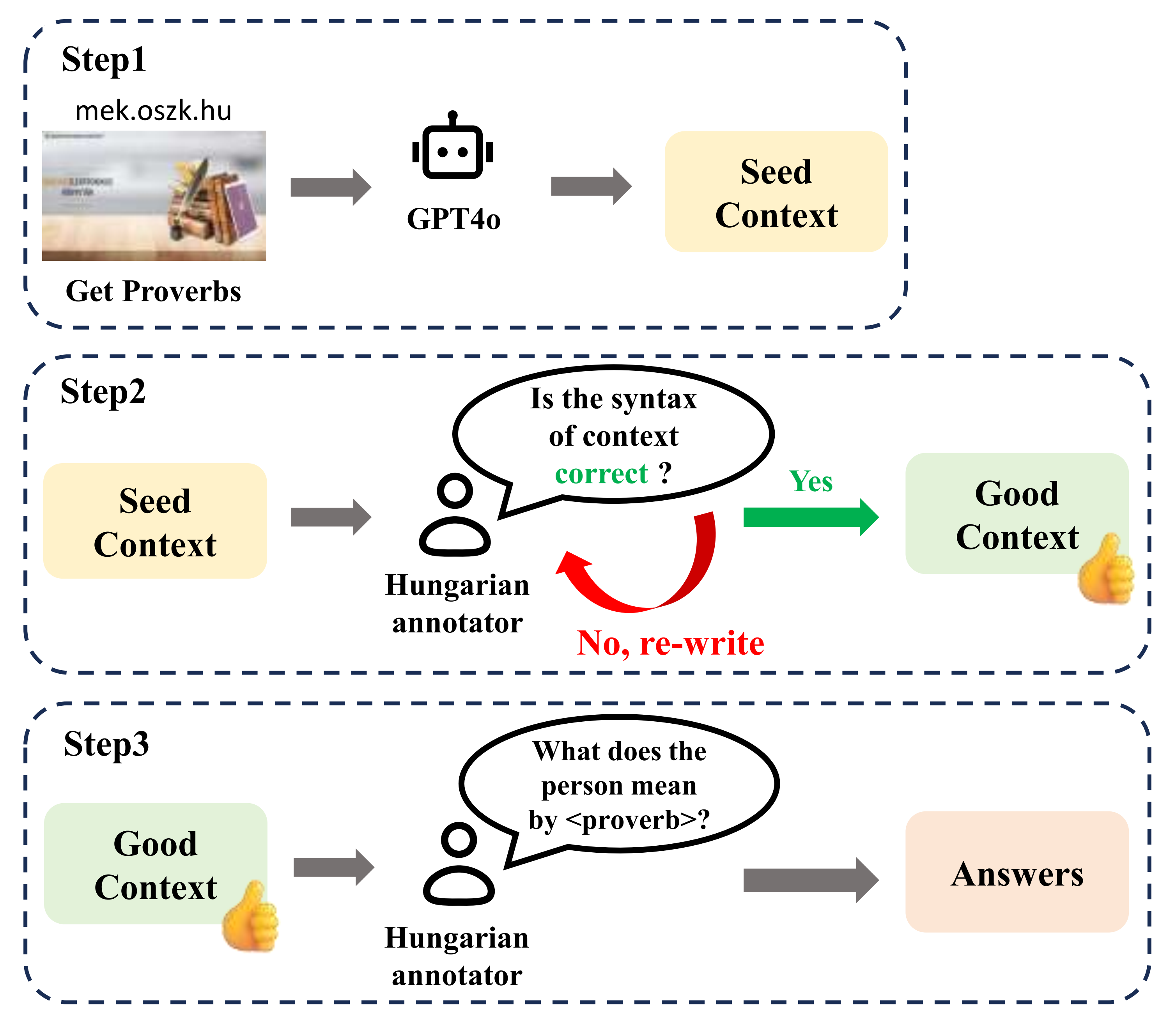

| HuProverbRea | 𝓛 | rule, llm | 2CQ/OE | 1135 |

| HuMatchingFIB | 𝓛, 𝓗 | rule | Matching Filling-in-Blank | 278 |

| HuStandardFIB | 𝓛, 𝓗 | rule, similarity matching | Standard Filling-in-Blank | 93 |
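The Judge column lists rule-based checking (for example, for the two-choice questions in HuProverbRea) and similarity matching (for HuStandardFIB). The following is a minimal sketch of what such scorers could look like; it is not OpenHuEval's actual evaluation code. The function names, the assumption that options are labelled `1` and `2`, the whitespace/case normalization, and the 0.8 similarity threshold are all hypothetical choices made for illustration.

```python
import re
from difflib import SequenceMatcher


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace; Hungarian accents are kept intact."""
    return " ".join(text.lower().split())


def score_two_choice(model_output: str, gold_option: int) -> bool:
    """Hypothetical rule-based check for a two-choice (2CQ) item.

    Assumes options are labelled 1 and 2 and that the first standalone
    '1' or '2' in the model output is the model's choice.
    """
    match = re.search(r"\b([12])\b", model_output)
    return match is not None and int(match.group(1)) == gold_option


def score_blank(prediction: str, gold: str, threshold: float = 0.8) -> bool:
    """Hypothetical fill-in-the-blank scorer.

    Accepts an exact match after normalization; otherwise falls back to a
    character-level similarity ratio compared against an assumed threshold.
    """
    pred, ref = normalize(prediction), normalize(gold)
    if pred == ref:
        return True
    return SequenceMatcher(None, pred, ref).ratio() >= threshold


print(score_two_choice("A helyes válasz: 2", 2))
print(score_blank("Petőfi Sandor", "Petőfi Sándor"))
print(score_blank("Petőfi", "Petőfi Sándor"))
```

The exact-match rule is cheap and unambiguous, while the similarity fallback tolerates small spelling or accent slips (such as "Sandor" for "Sándor") without accepting clearly incomplete answers. Open-ended answers, by contrast, are what the LLM and checklist judges are listed for.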

🖊️ Citation

@misc{yang2025openhuevalevaluatinglargelanguage,
  title={OpenHuEval: Evaluating Large Language Model on Hungarian Specifics},
  author={Haote Yang and Xingjian Wei and Jiang Wu and Noémi Ligeti-Nagy and Jiaxing Sun and Yinfan Wang and Zijian Győző Yang and Junyuan Gao and Jingchao Wang and Bowen Jiang and Shasha Wang and Nanjun Yu and Zihao Zhang and Shixin Hong and Hongwei Liu and Wei Li and Songyang Zhang and Dahua Lin and Lijun Wu and Gábor Prószéky and Conghui He},
  year={2025},
  eprint={2503.21500},
  archivePrefix={arXiv},
  primaryClass={cs.CL},
  url={https://arxiv.org/abs/2503.21500},
}