Benchmarking Chinese Commonsense Reasoning of LLMs: From

Chinese-Specifics to Reasoning-Memorization Correlations.

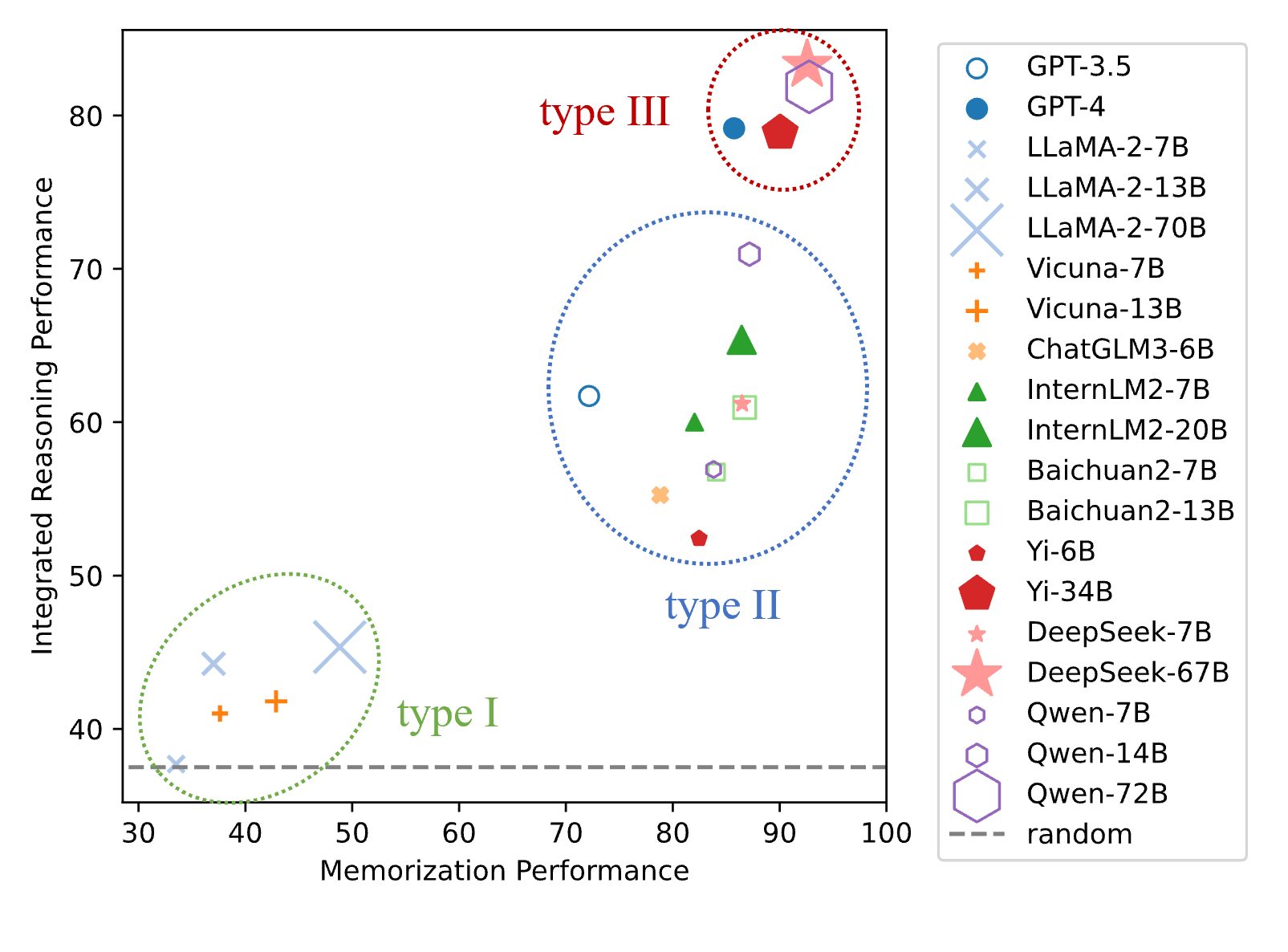

💡 This page will showcase some conclusions and findings based on CHARM, including Prompt Strategy, Integrated Reasoning vs Memorization, and Memorization-Independent Reasoning.

Prompt Strategy

We selected 5 commonly used prompt strategies, and assessed the performance of the 19 LLMs on CHARM reasoning task. We evaluated the currently commonly used LLMs, which can be divided into two categories:

(1) 7 English LLMs, including GPT-3.5, GPT4, LLaMA-2 (7B,13B,70B), and Vicuna (7B,13B).(2) 12 Chinese-oriented LLMs, including ChatGLM3 (6B), Baichuan2 (7B,13B), InternLM2 (7B,20B), Yi (6B,34B), DeepSeek (7B,67B) and Qwen (7B,14B,72B).

Examples of prompt strategies

| Prompt Strategy | Description | Example |

|---|---|---|

| Direct | The LLM does not perform intermediate reasoning and directly predicts the answer. | Q:以下陈述是否包含时代错误,请选择正确选项。一个接受了义务教育、具备基本常识的人会如何选择?李白用钢笔写诗。 选项:(A) 是 (B) 否 A:(A) |

| ZH-CoT | The LLM conducts intermediate reasoning in Chinese before producing the answer. | Q:以下陈述是否包含时代错误,请选择正确选项。一个接受了义务教育、具备基本常识的人会如何选择?李白用钢笔写诗。 选项:(A) 是 (B) 否 A:让我们一步一步来思考。这个陈述提到了“李白”,他是中国唐朝时期的诗人。而陈述中提到的“钢笔”是现代设备,因此李白不可能使用钢笔写诗,该陈述包含时代错误。所以答案是(A)。 |

| EN-CoT | The reasoning process of CoT is in English for the Chinese questions. | Q:以下陈述是否包含时代错误,请选择正确选项。一个接受了义务教育、具备基本常识的人会如何选择?李白用钢笔写诗。 选项:(A) 是 (B) 否 A:Let's think step by step.This statement mentions "Li Bai", a poet from the Tang Dynasty in China. The "pen" mentioned in the statement is a modern device, so it is impossible for Li Bai to write poetry with a pen. This statement contains errors from the times. So the answer is (A). |

| Translate-EN | We used the DeepL api to translate our benchmark into English, and then used English CoT for reasoning. | Q: Choose the correct option if the following statement contains an anachronism. How would a person

with compulsory education and basic common sense choose?Li Bai wrote poetry with a fountain

pen. Options:(A) Yes (B) No A: Let's think step by step.The statement mentions "Li Bai", a Chinese poet from the Tang Dynasty. The "fountain pen" mentioned in the statement is a modern device, so Li Bai could not have used a fountain pen to write his poems, and the statement contains an anachronism. The answer is (A). |

| XLT | The template prompt XLT was used to change the original question into an English request, solve it step by step, and finally format the answer for output. | I want you to act as a commonsense reasoning expert for

Chinese.Request:以下陈述是否包含时代错误,请选择正确选项。一个接受了义务教育、具备基本常识的人会如何选择?李白用钢笔写诗。选项:(A) 是 (B) 否 You should retell the request in English. You should do the answer step by step to choose the right answer.You should step-by-step answer the request. You should tell me the answer in this format 'So the answer is'. Request: How would a typical person answer each of the following statements whether it contains an anachronism? Li Bai writes poetry with a pen. Option:(A) Yes (B) No Step-by-step answer: 1.This statement mentions "Li Bai", a poet from the Tang Dynasty in China. 2.The pen mentioned in the statement is a modern device. 3. so, it is impossible for Li Bai to write poetry with a pen. This statement contains errors from the times. So the answer is (A). |

We tested the combinations of the 19 LLMs and the 5 prompt strategies in CHARM reasoning tasks. The results are shown in the table below.

Averaged accuracy on CHARM reasoning tasks

| Prompt | Avg. all LLMs | Avg. CN-LLMs | Avg. EN-LLMs | |

|---|---|---|---|---|

| Avg. all domains | Direct | 46.28 | 48.41 | 42.64 |

| ZH-CoT | 56.66 | 62.40 | 46.81 | |

| EN-CoT | 54.46 | 58.19 | 48.06 | |

| Translate-EN | 53.88 | 55.51 | 51.07 | |

| XLT | 56.81 | 59.09 | 52.90 | |

| Avg. Chinese domain | Direct | 45.43 | 47.76 | 41.44 |

| ZH-CoT | 56.35 | 62.23 | 46.26 | |

| EN-CoT | 52.06 | 56.36 | 44.68 | |

| Translate-EN | 47.25 | 47.82 | 46.27 | |

| XLT | 53.80 | 56.63 | 48.96 | |

| Avg. global domain | Direct | 47.13 | 49.05 | 43.85 |

| ZH-CoT | 56.96 | 62.57 | 47.35 | |

| EN-CoT | 56.85 | 60.01 | 51.44 | |

| Translate-EN | 60.50 | 63.20 | 55.87 | |

| XLT | 59.82 | 61.56 | 56.84 |

💡 Results showed that LLMs' orientation and the task's domain affect prompt strategy performance, which enriches previous research findings.

From the LLM dimension, it's clear that various LLMs prefer different prompt strategies: XLT consistently excels for English LLMs among the 5 strategies, while for Chinese-oriented LLMs, despite some complexity, ZH-CoT generally performs best.

From the commonsense domain dimension, strategies that use English for reasoning (like XLT, Translate-EN, etc.) are suitable for the global domain; however, ZH-CoT generally performs better in the Chinese domain.

The conclusion here differs from previous studies (Huang et al., 2023a, Zhang et al., 2023a, Shi et al., 2022), which suggested that employing English for non-English reasoning tasks was more effective than using the native language.

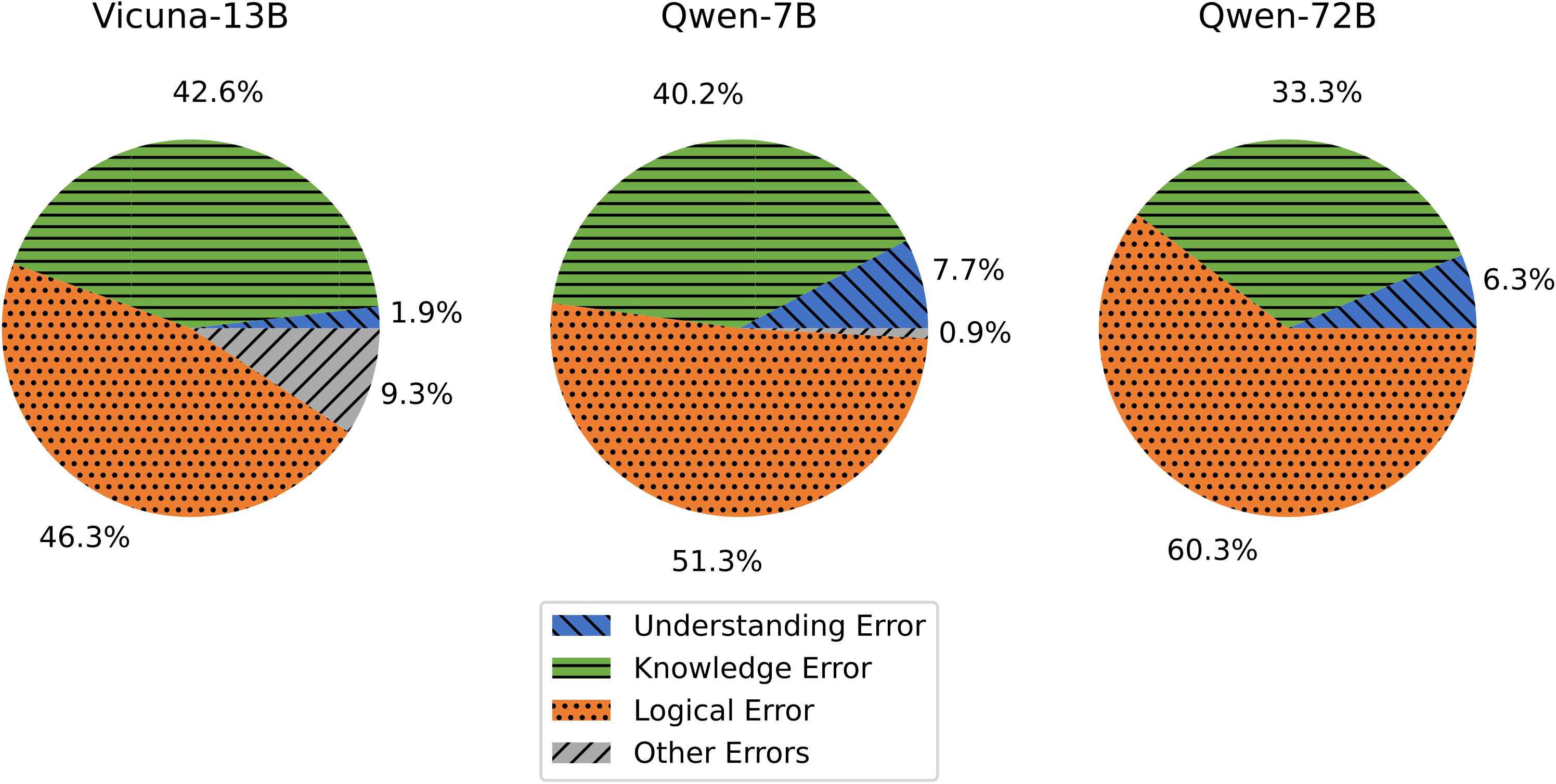

💡 If Language Models (LLMs) provide incorrect responses to retained reasoning questions, these can be termed as memorization-independent reasoning errors. We analyzed these errors by manually reviewing the reasoning process and categorized them into four main types:

- Understanding Error: In this case, the LLM was unable to accurately comprehend the question, including misunderstanding the content, ignoring or even modifying important information in the premise, and failing to grasp the core query of the question.

- Knowledge Error: The LLM incorporated inaccurate knowledge during the reasoning process. It's important to highlight that the knowledge pieces related to the reasoning question were previously examined in the related memorization questions, which the LLM answered correctly. However, the LLM output incorrect information during the reasoning phase.

- Logical Error: The LLM made logical reasoning errors, such as mathematical reasoning errors, inability to reach the correct conclusion based on sufficient information, or reaching the correct conclusion but outputting the wrong option.

- Other Errors: These are other scattered, relatively rare types of errors.

💡 Distribution of the Memorization-Independent Reasoning Errors

Istribution of the memorization-independent reasoning errors

💡 Examples of the 3 types of memorization-independent reasoning errors of LLMs

Examples of the 3 types of memorization-independent reasoning errors of LLMs.

🖊️ Citation

@misc{sun2024benchmarking,

title={Benchmarking Chinese Commonsense Reasoning of LLMs: From Chinese-Specifics to Reasoning-Memorization Correlations},

author={Jiaxing Sun and Weiquan Huang and Jiang Wu and Chenya Gu and Wei Li and Songyang Zhang and Hang Yan and Conghui He},

year={2024},

eprint={2403.14112},

archivePrefix={arXiv},

primaryClass={cs.CL}

}