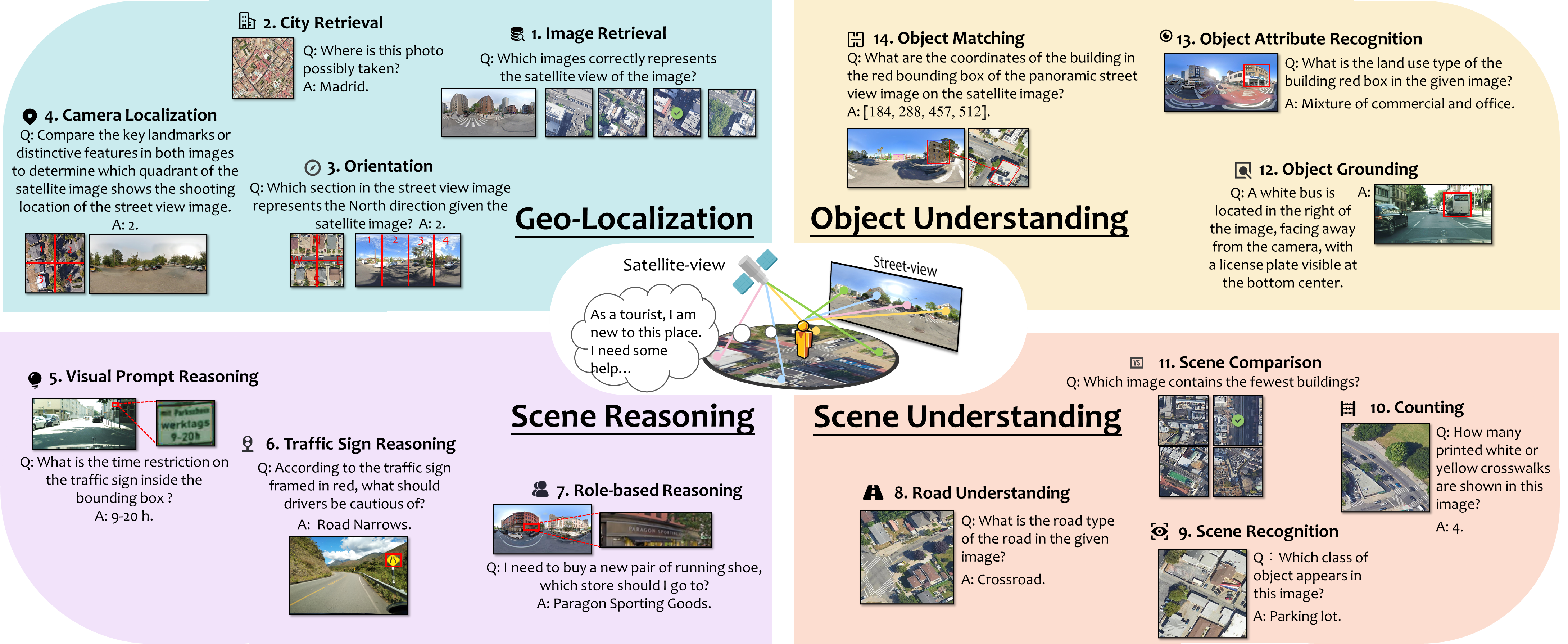

UrBench Overview

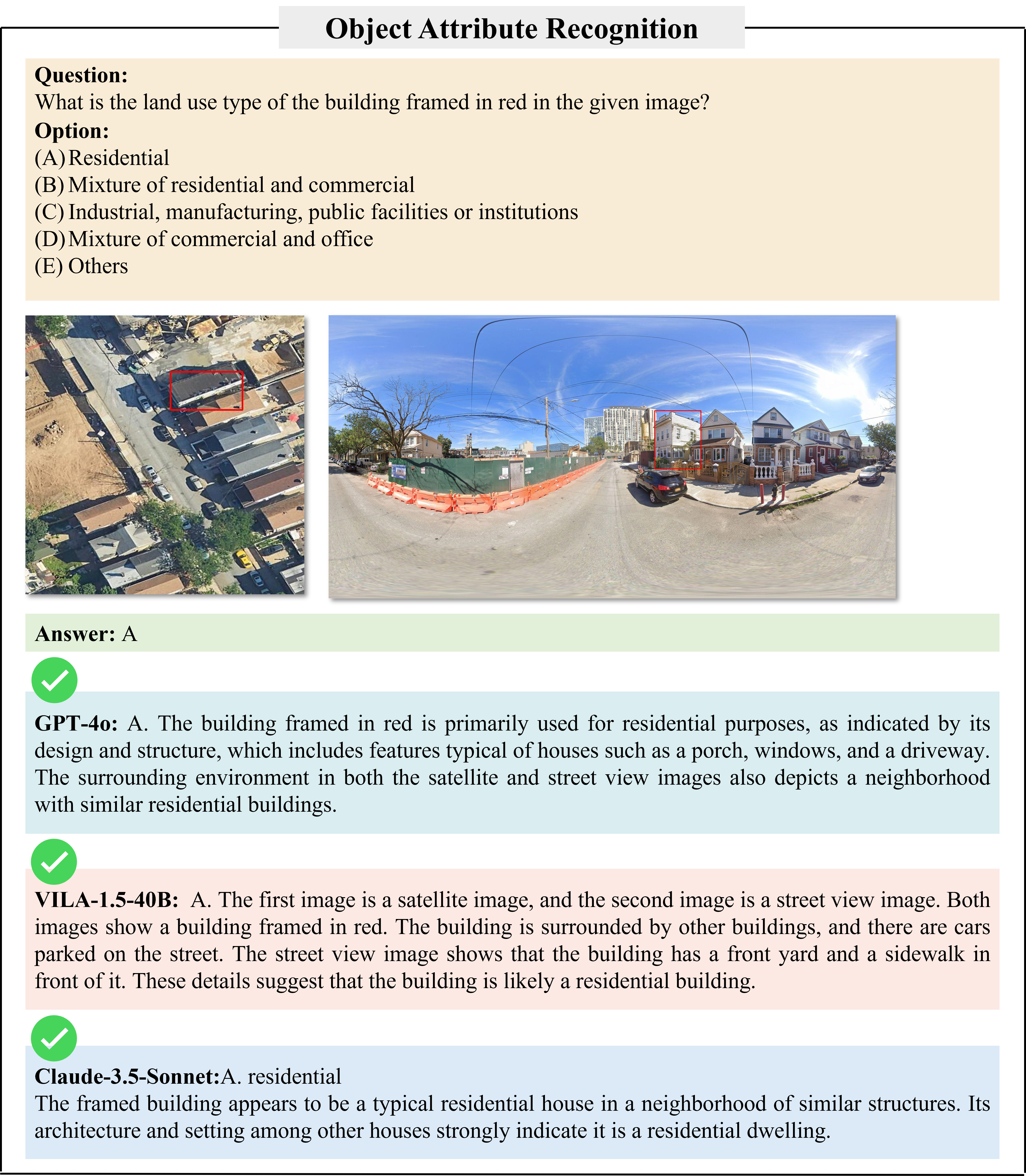

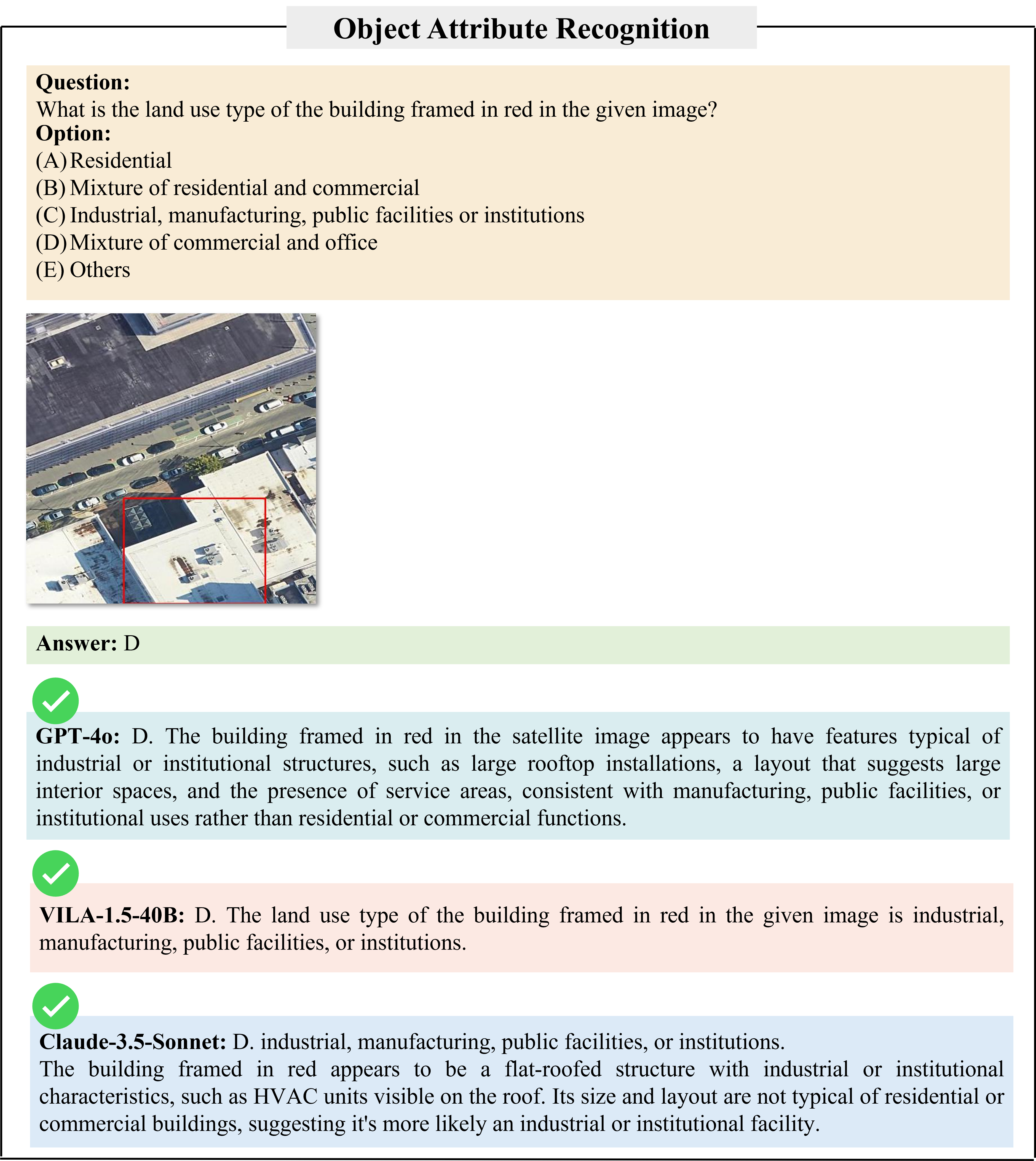

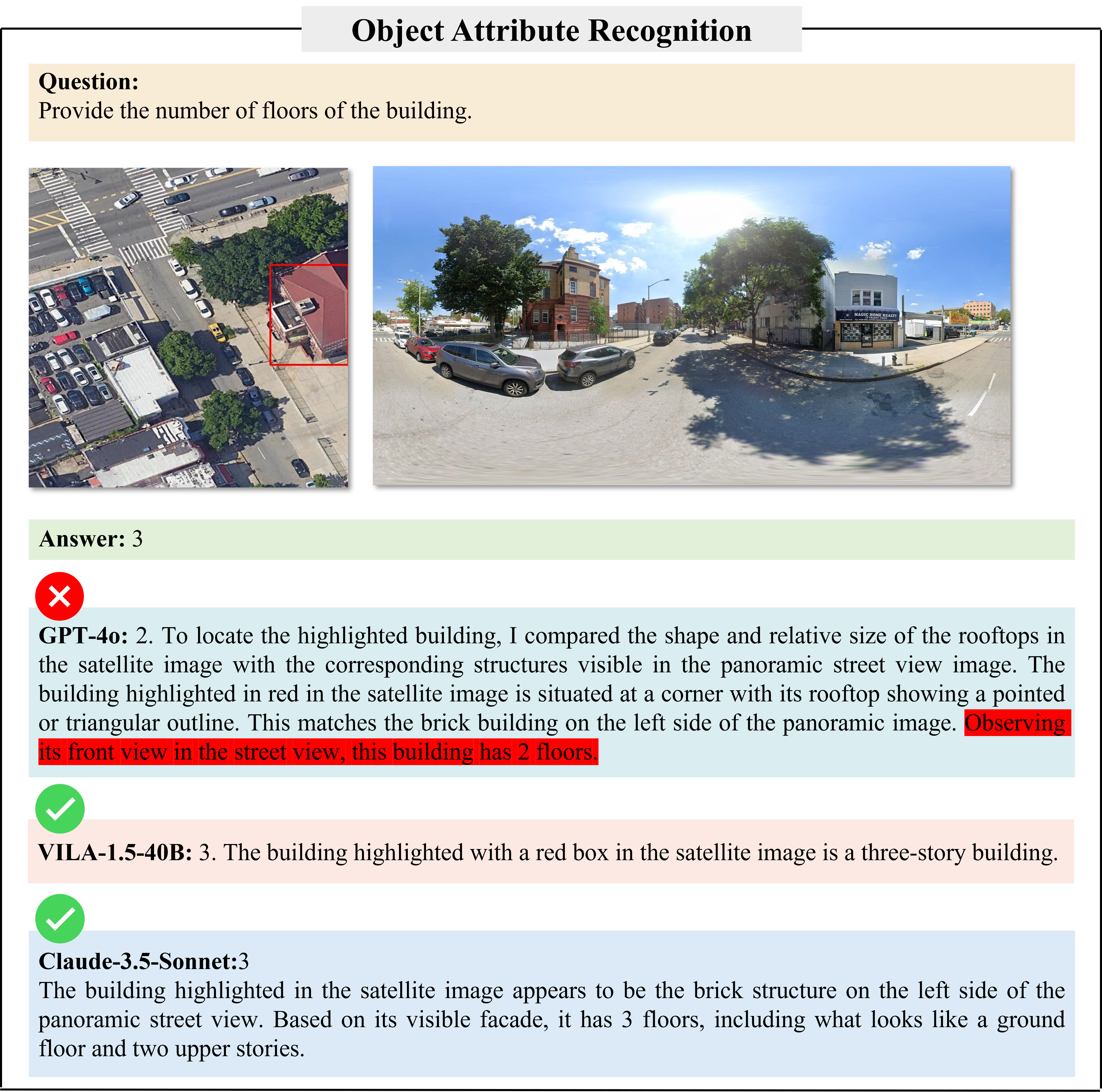

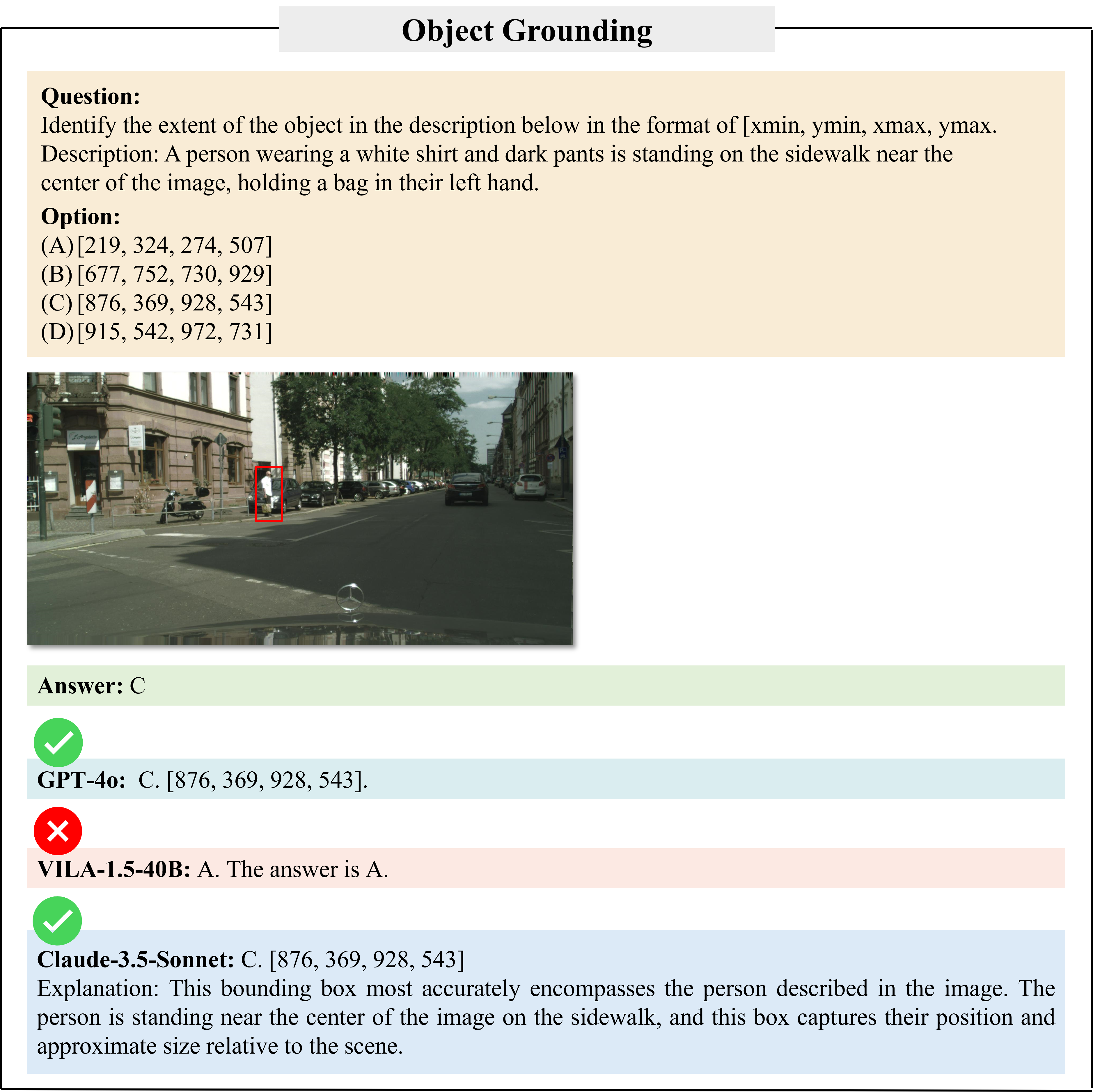

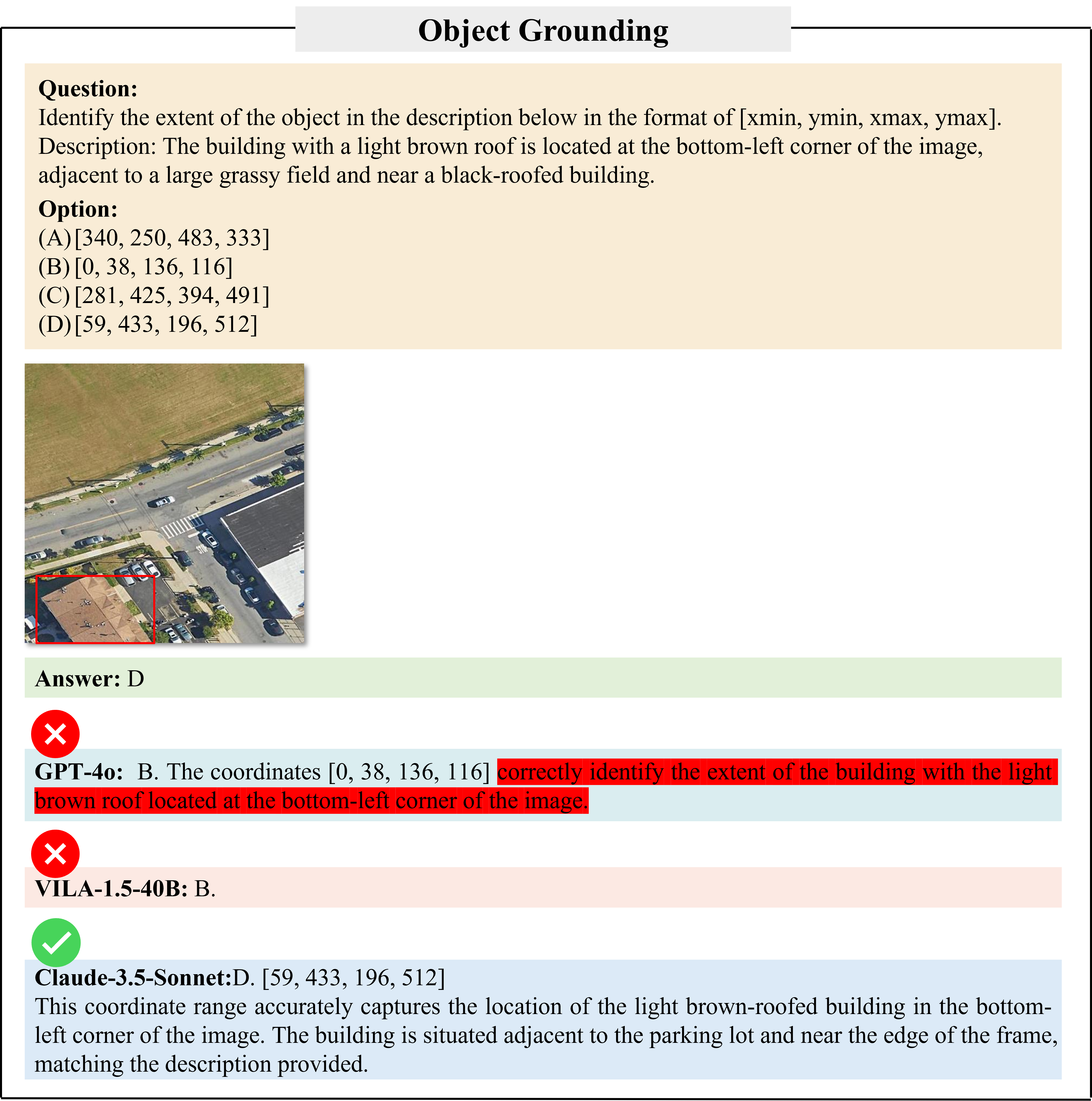

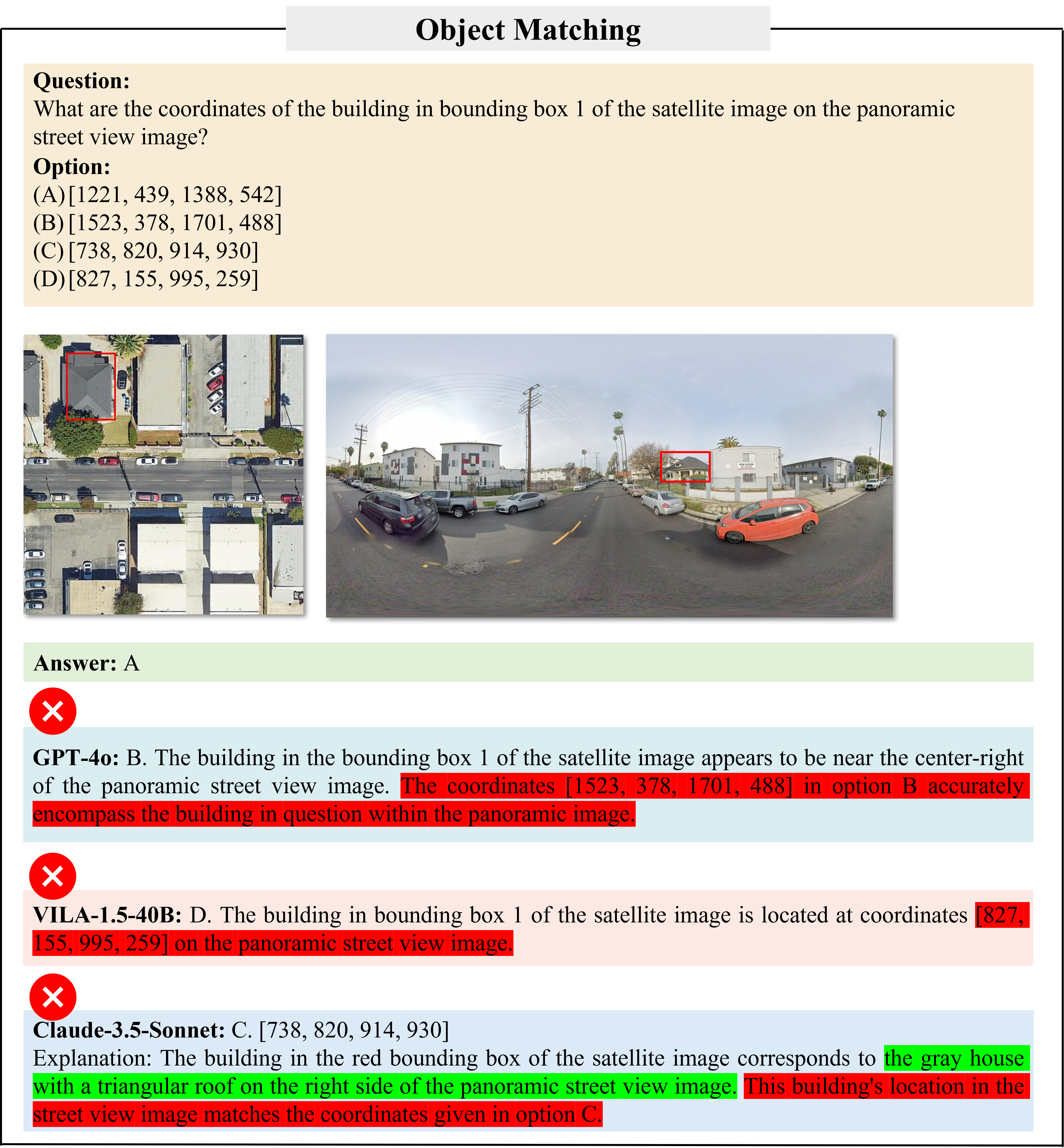

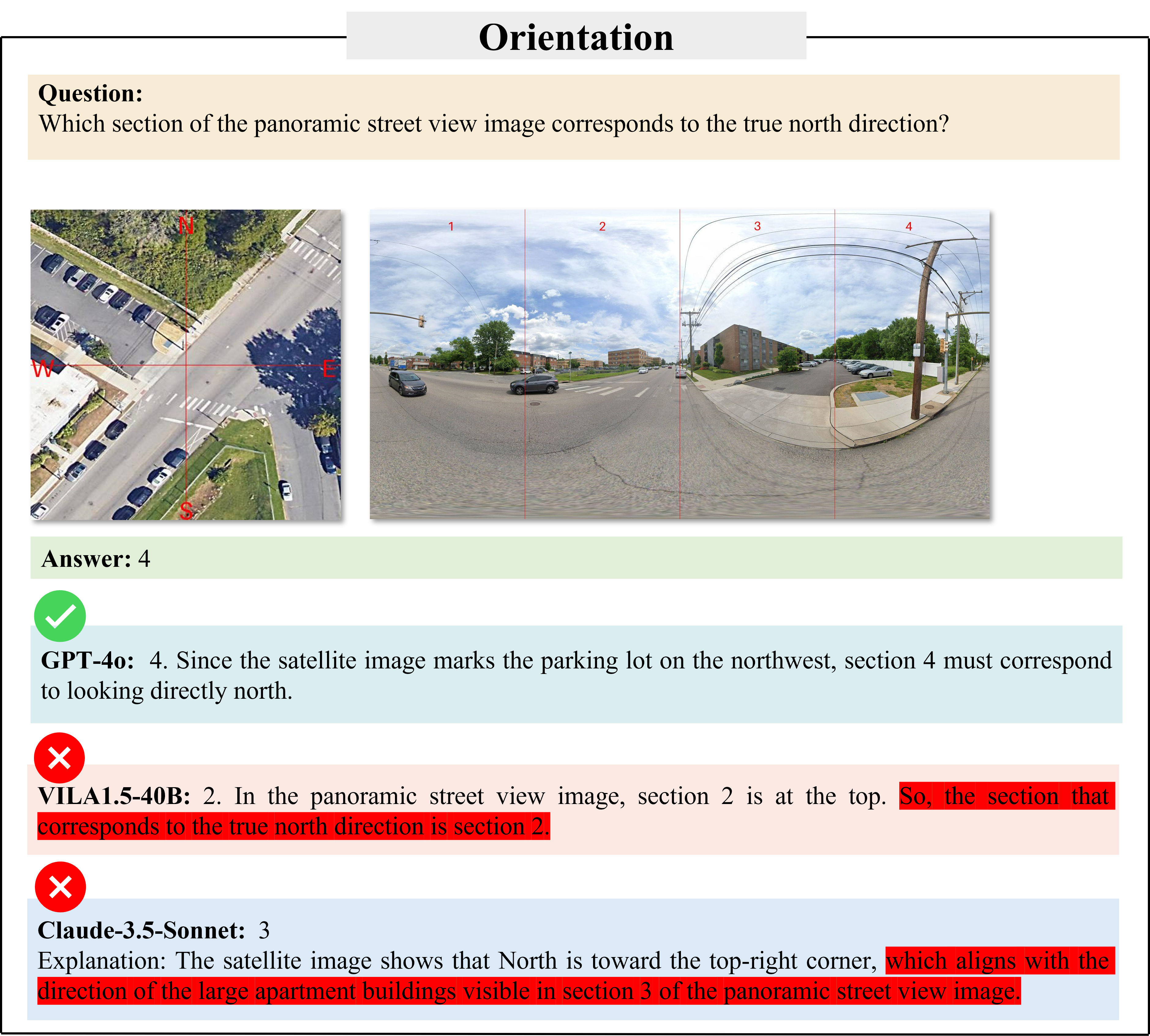

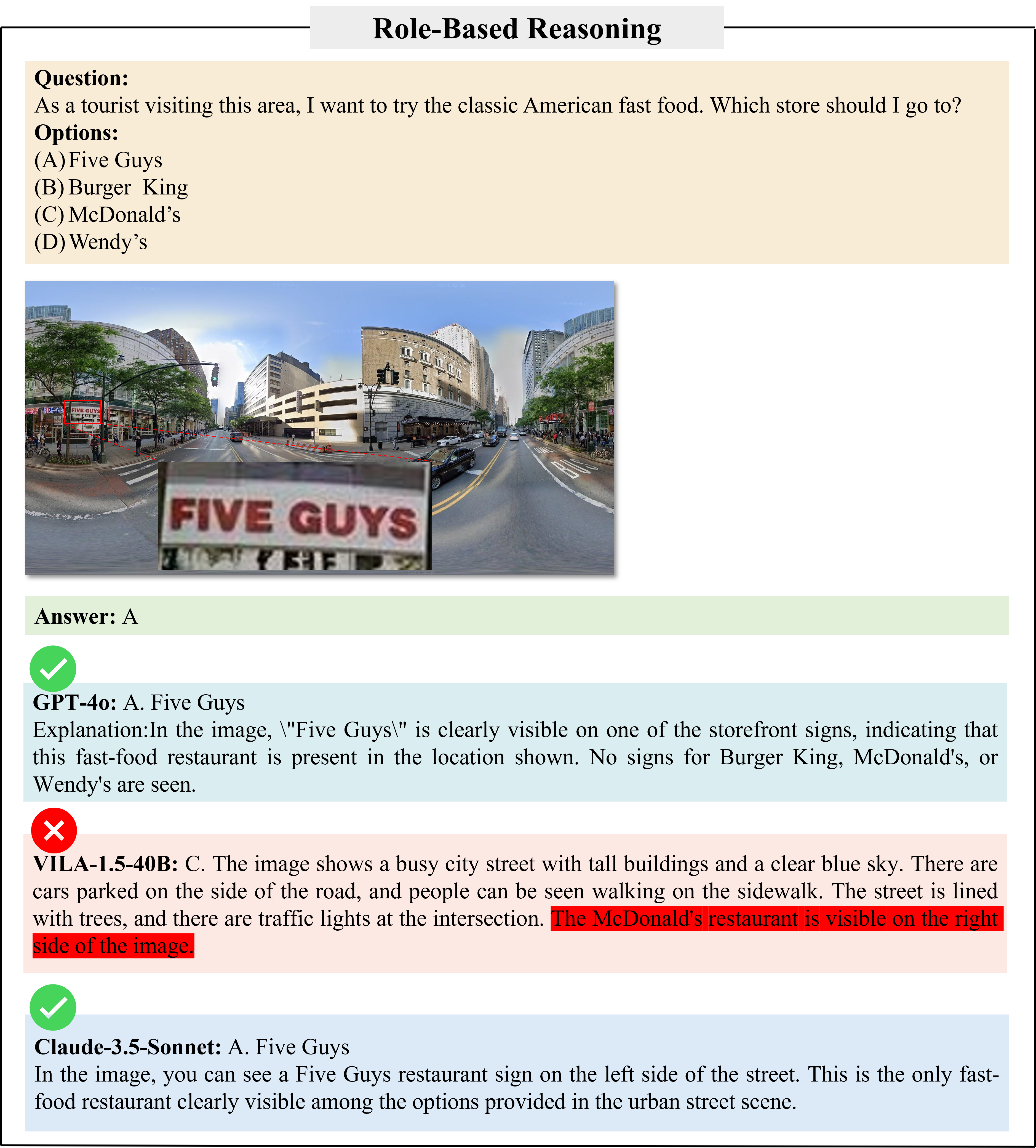

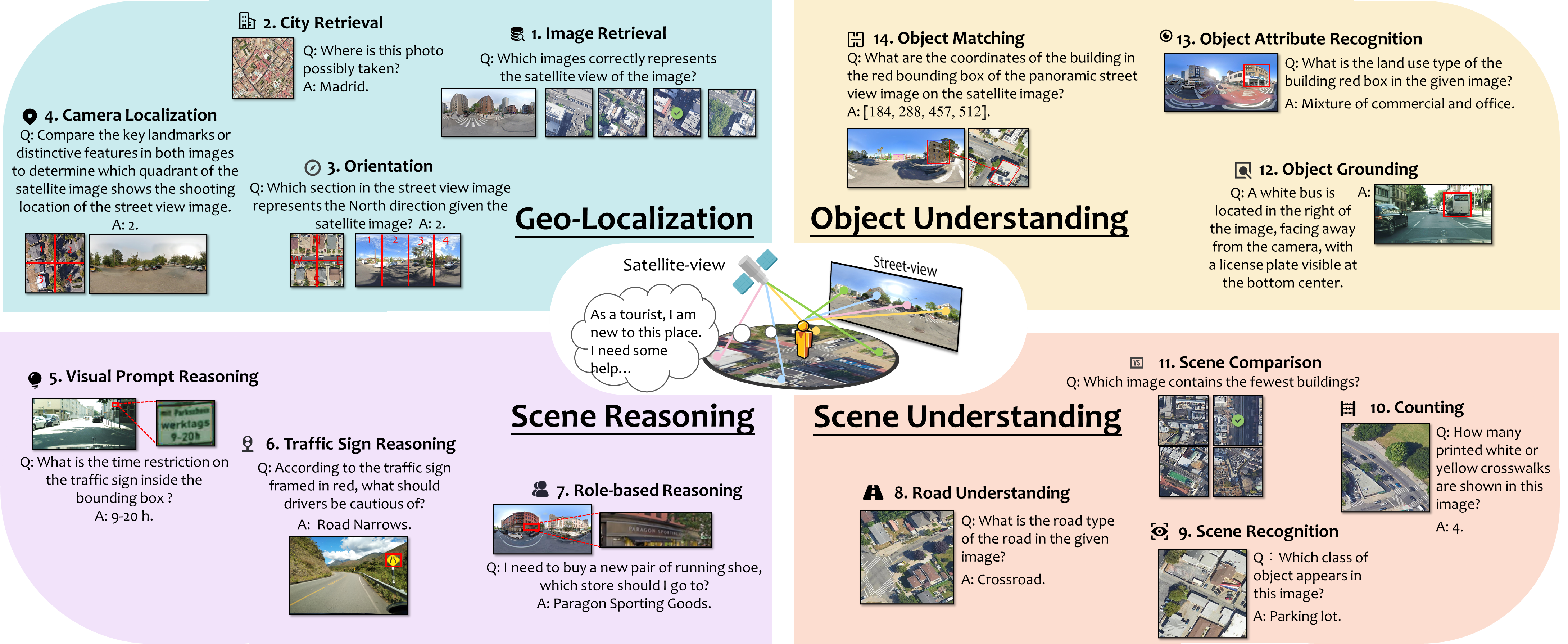

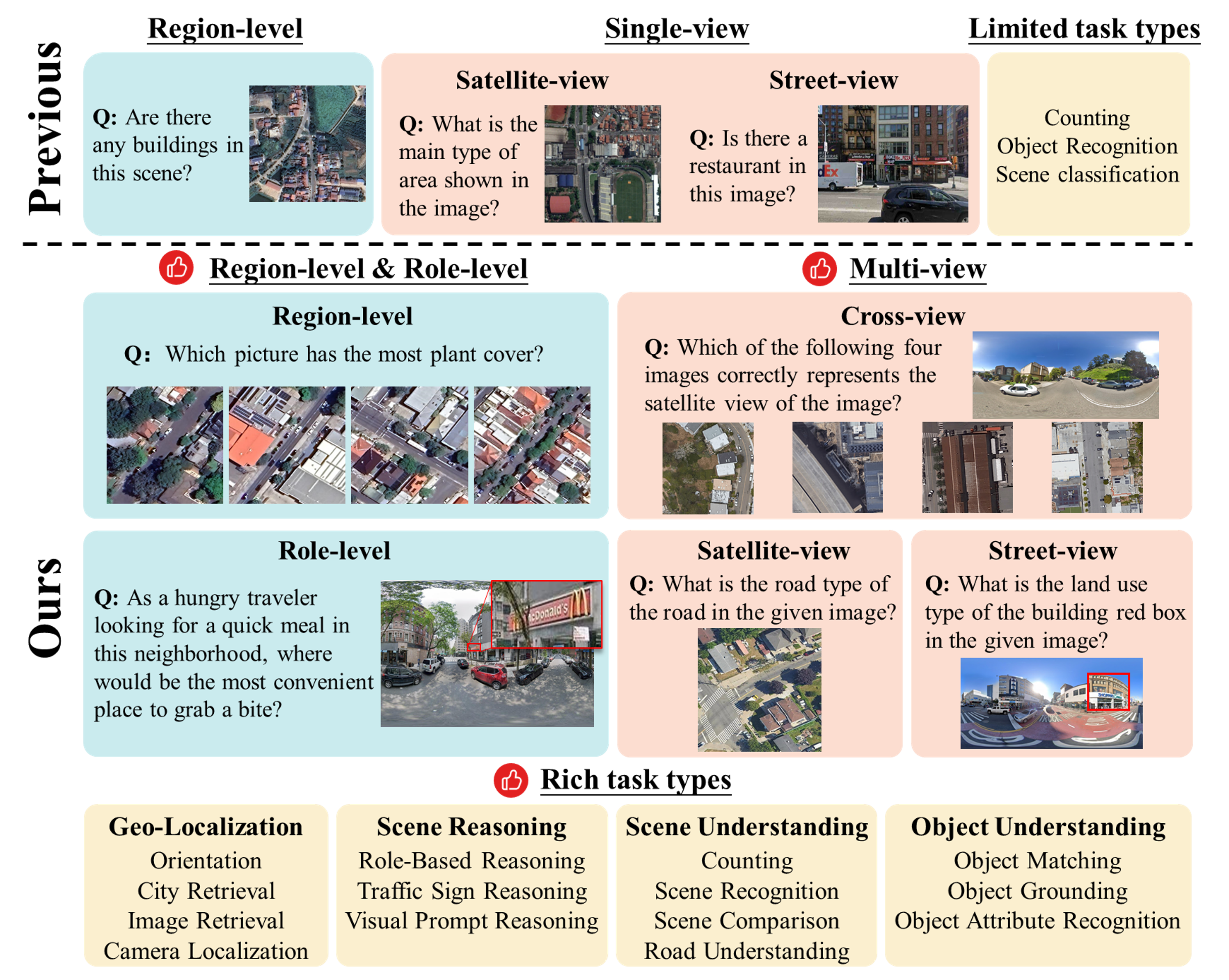

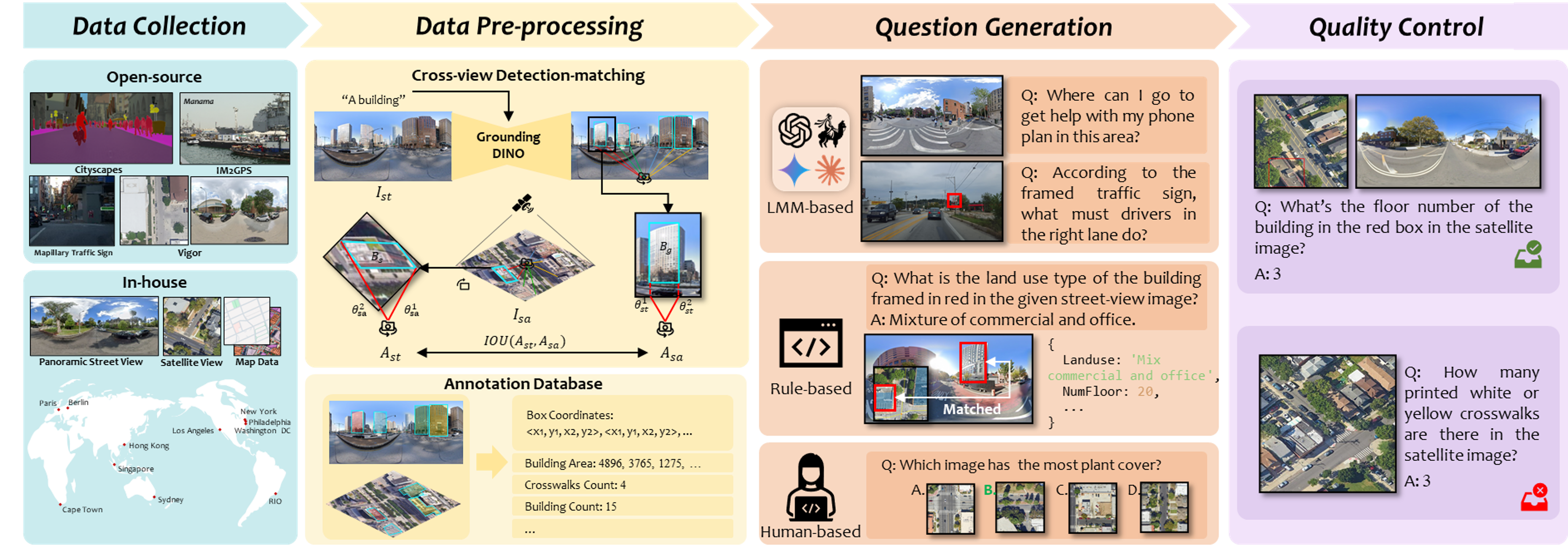

Recent evaluations of Large Multimodal Models (LMMs) have explored their capabilities in various domains, but only a few benchmarks focus specifically on urban environments. Moreover, existing urban benchmarks have been limited to evaluating LMMs on basic region-level urban tasks from a single view, leading to incomplete evaluations of LMMs' abilities in urban environments. To address these issues, we present UrBench, a comprehensive benchmark designed for evaluating LMMs in complex multi-view urban scenarios. UrBench contains 11.6K meticulously curated questions at both region-level and role-level that cover 4 task dimensions: Geo-Localization, Scene Reasoning, Scene Understanding, and Object Understanding, totaling 14 task types. In constructing UrBench, we utilize data from existing datasets and additionally collect data from 11 cities, creating new annotations using a cross-view detection-matching method. With these images and annotations, we then integrate LMM-based, rule-based, and human-based methods to construct large-scale, high-quality questions. Our evaluation of 21 LMMs shows that current LMMs struggle in urban environments in several aspects. Even the best-performing model, GPT-4o, lags behind humans in most tasks, ranging from simple tasks such as counting to complex tasks such as orientation, localization, and object attribute recognition, with an average performance gap of 17.4%. Our benchmark also reveals that LMMs behave inconsistently across different urban views, especially with respect to understanding cross-view relations. UrBench datasets and benchmark results will be publicly available.

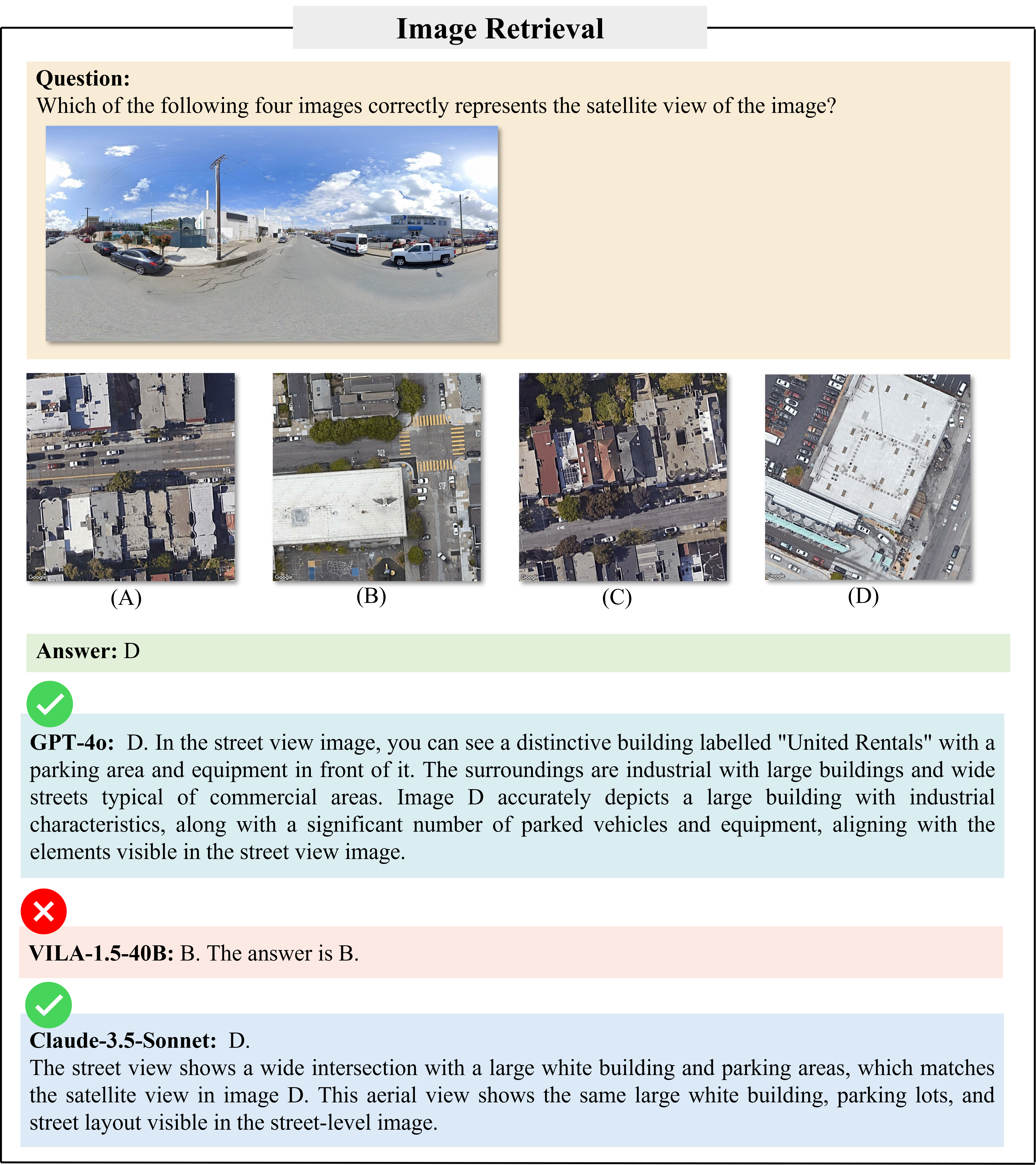

We introduce a novel benchmark curation pipeline that involves a cross-view detection-matching algorithm for object-level annotation generation and a question generation approach that integrates LMM-based, rule-based, and human-based methods. This pipeline ensures the creation of a large-scale and high-quality corpus of questions, significantly enhancing the diversity and depth of evaluation across multiple urban tasks.

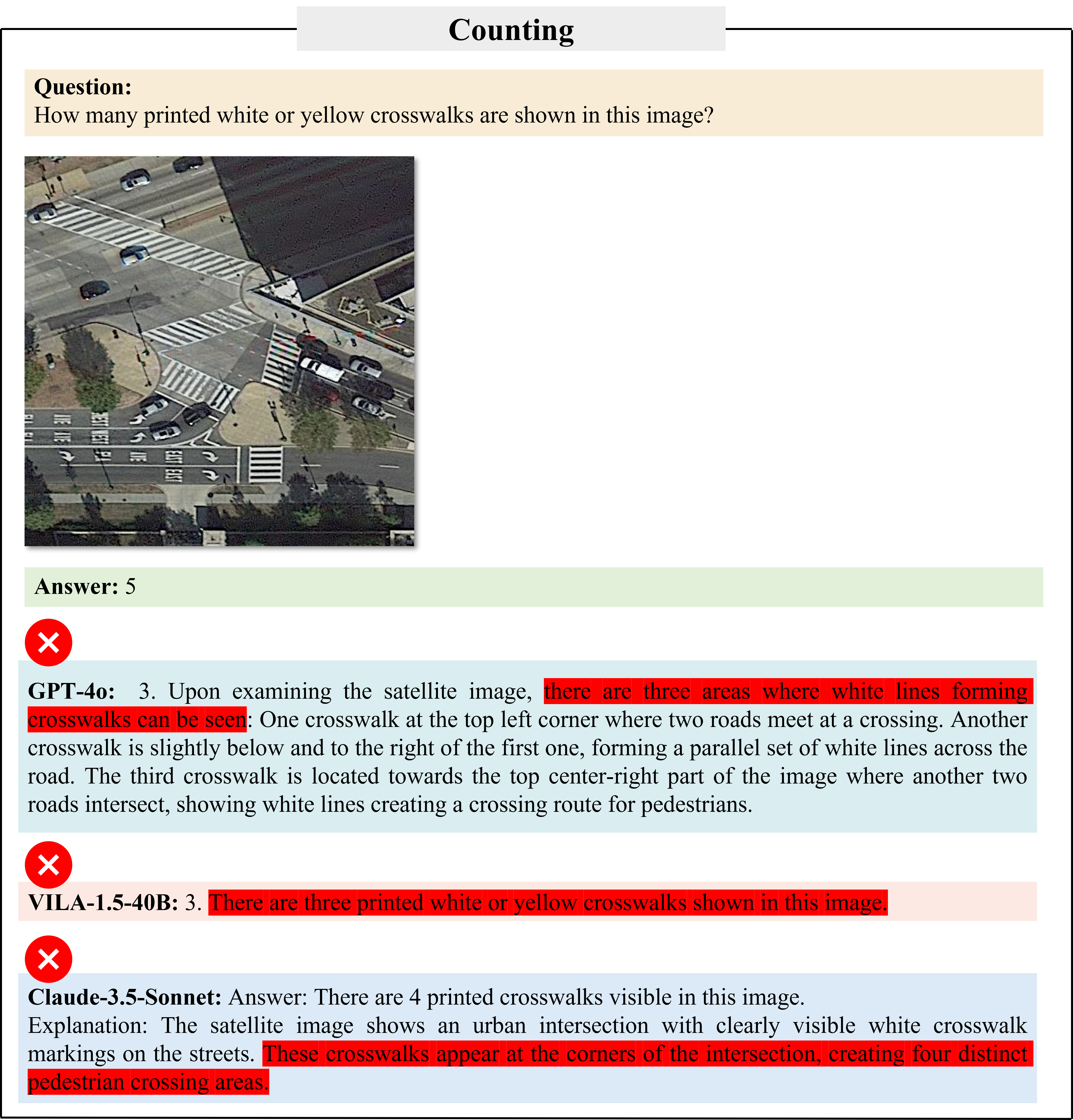

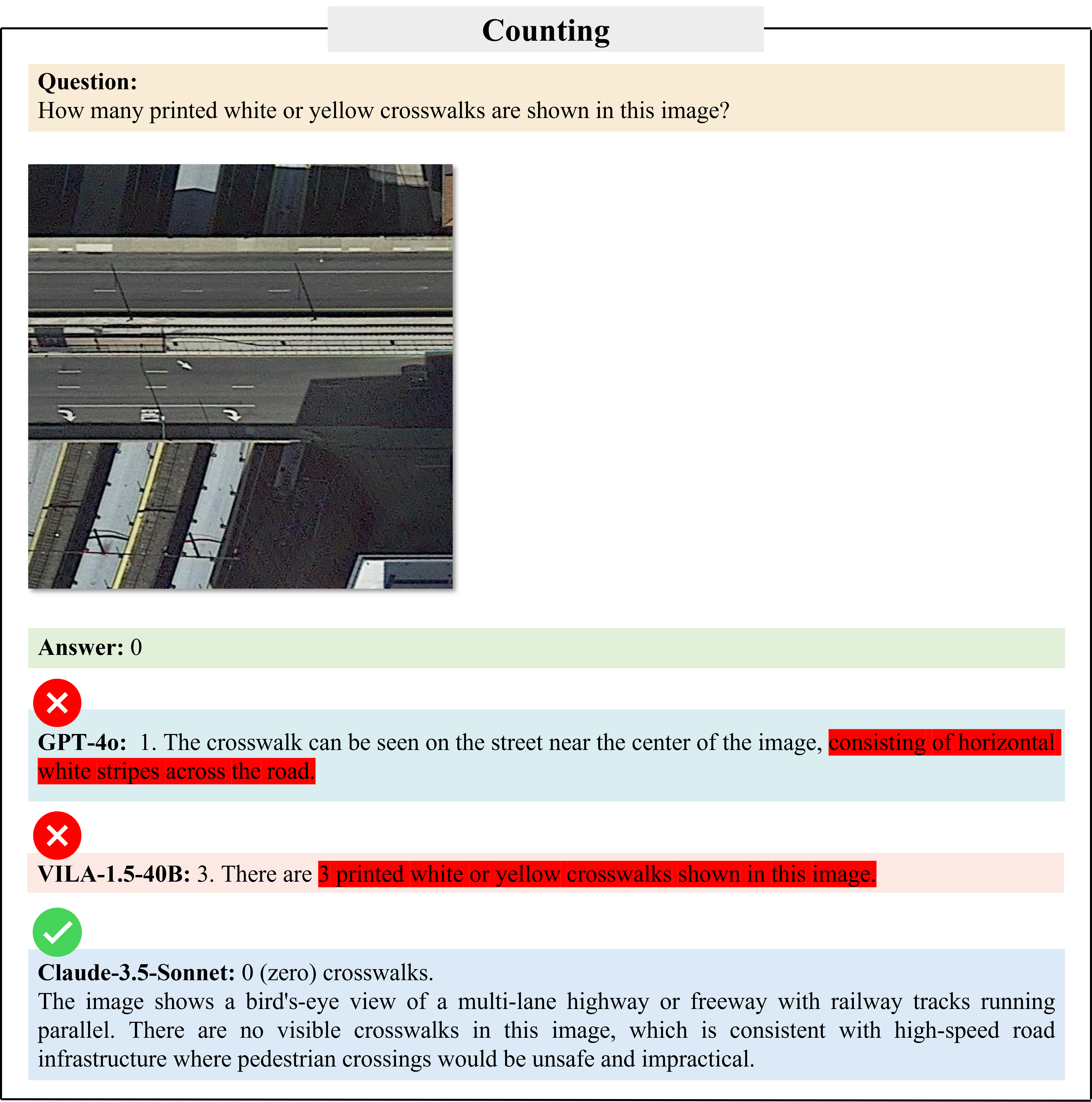

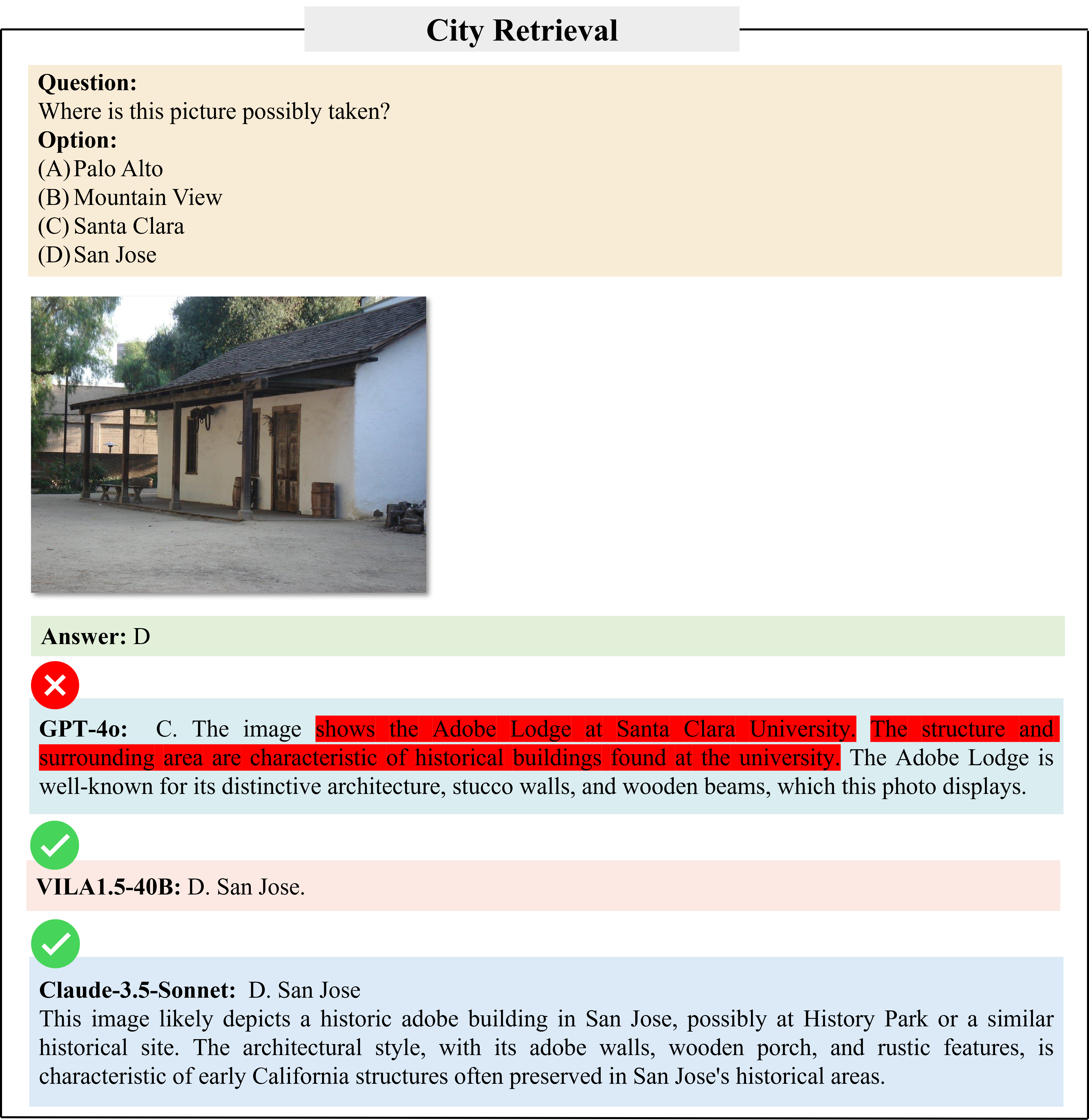

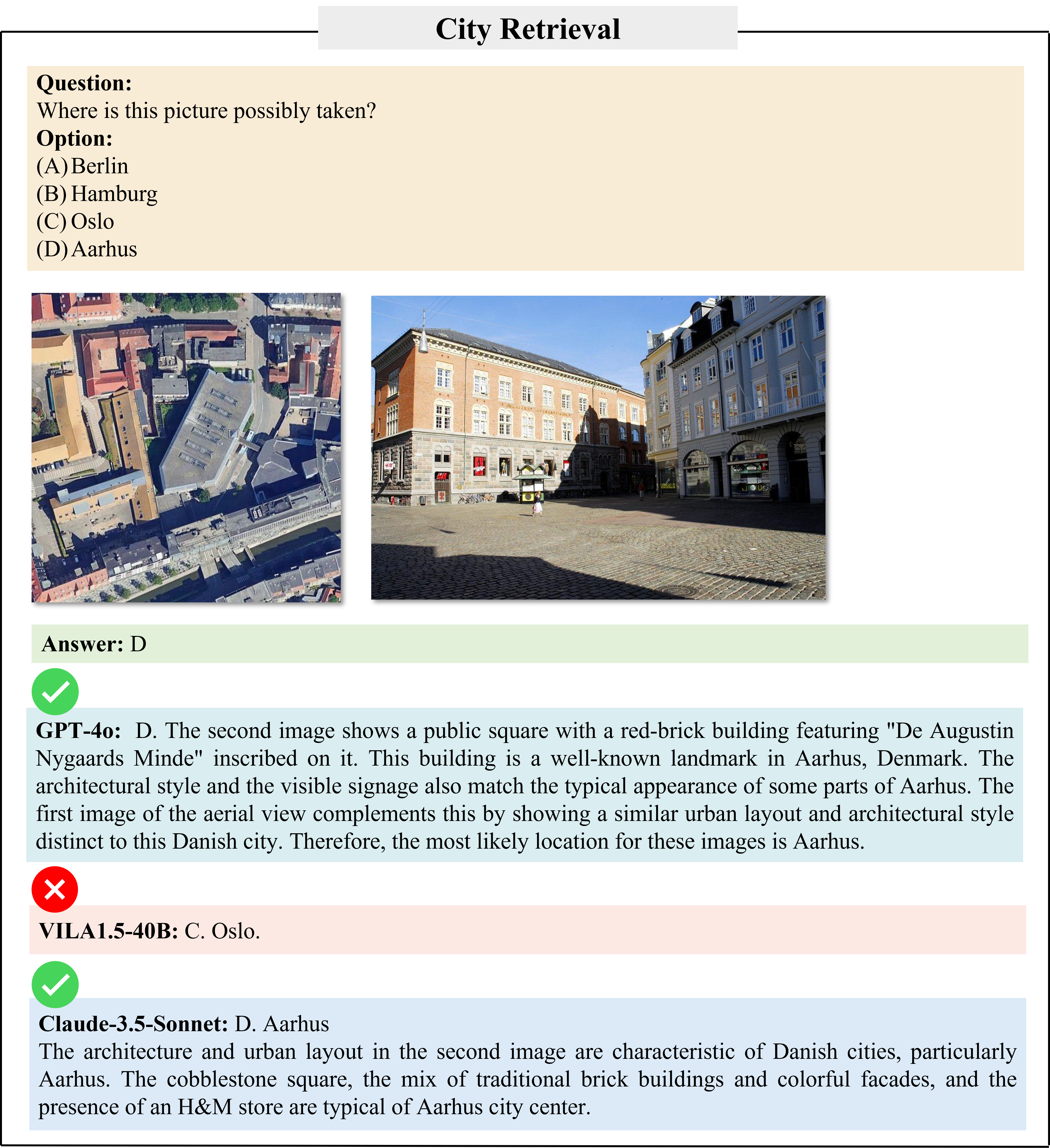

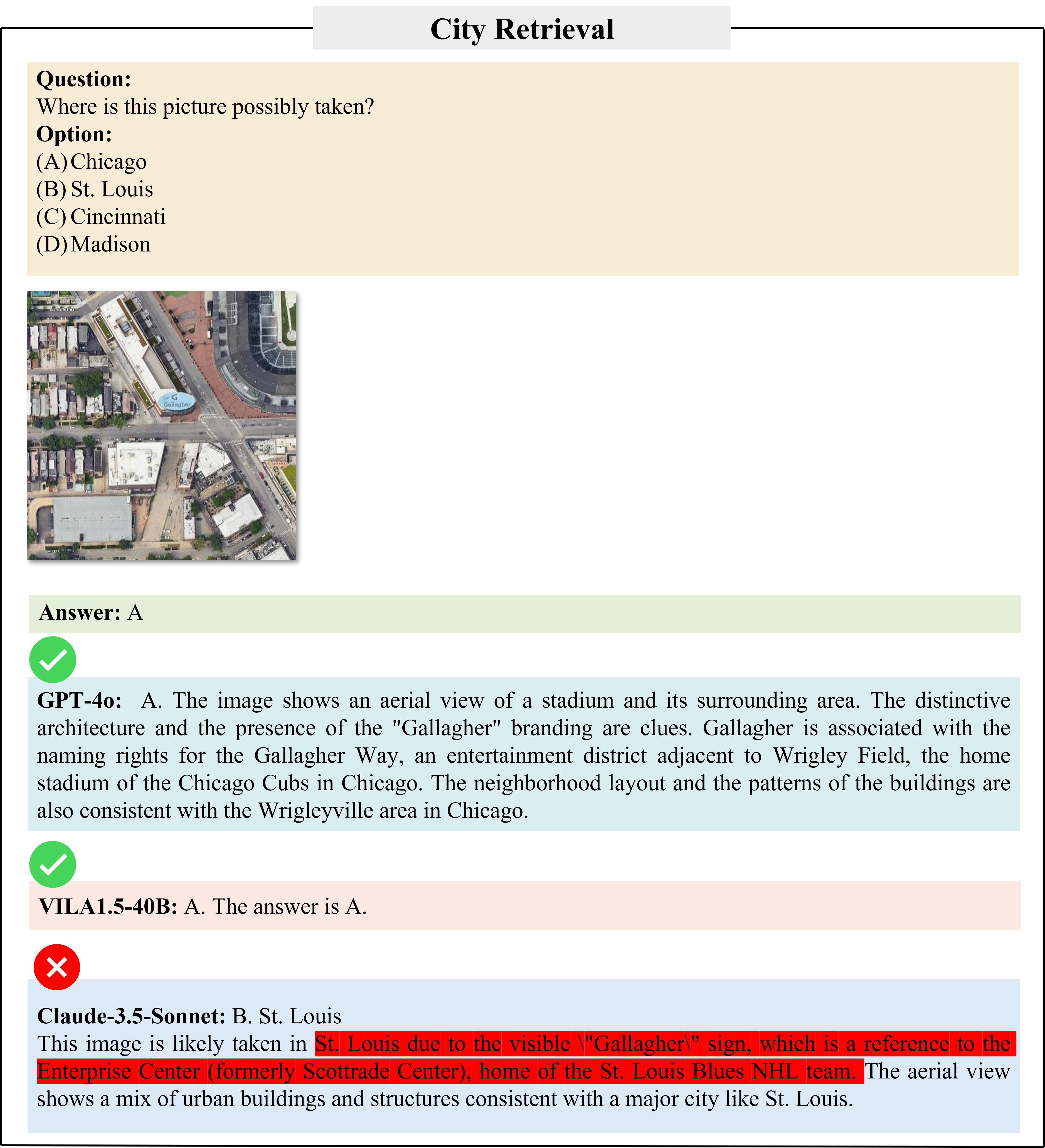

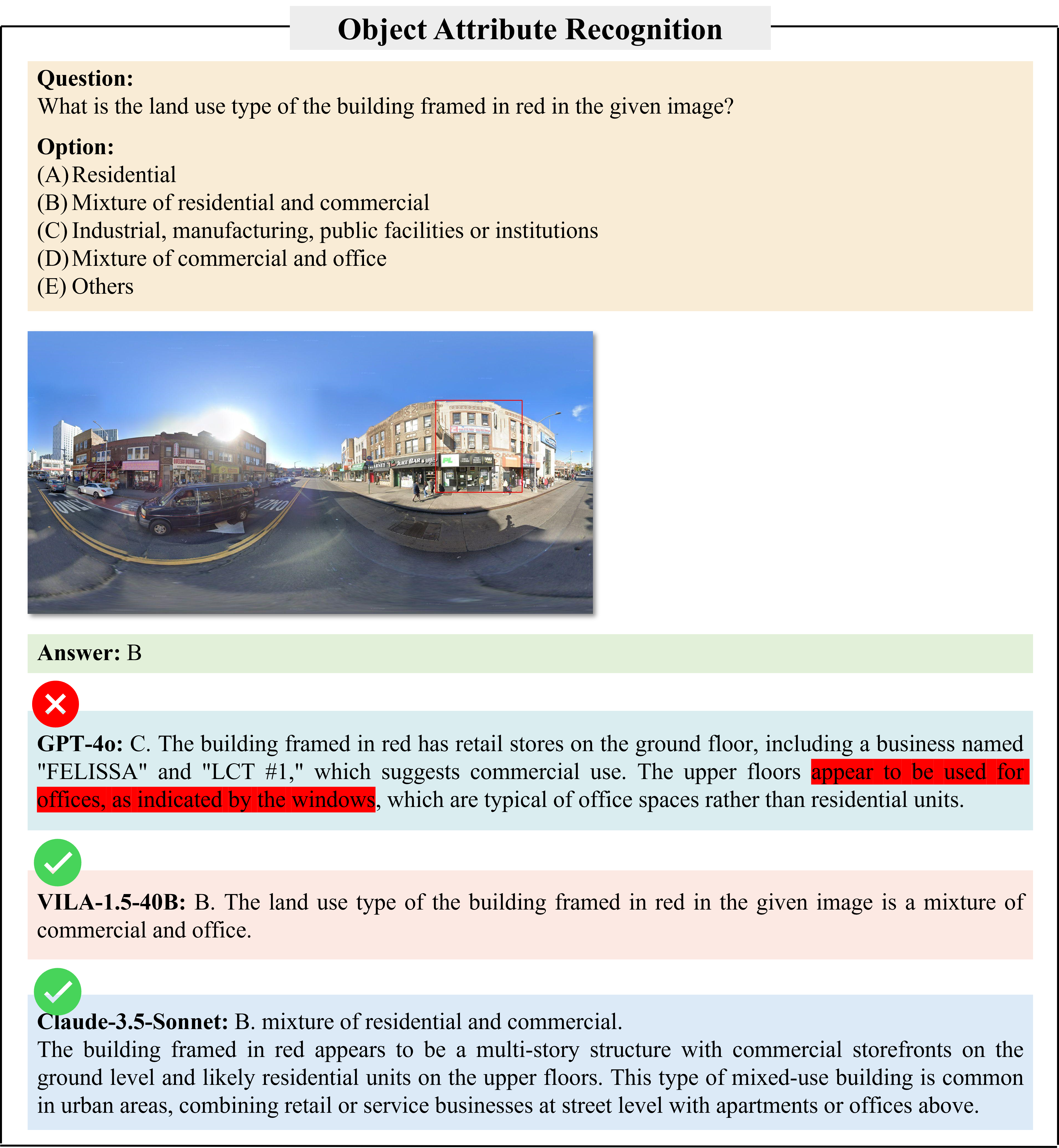

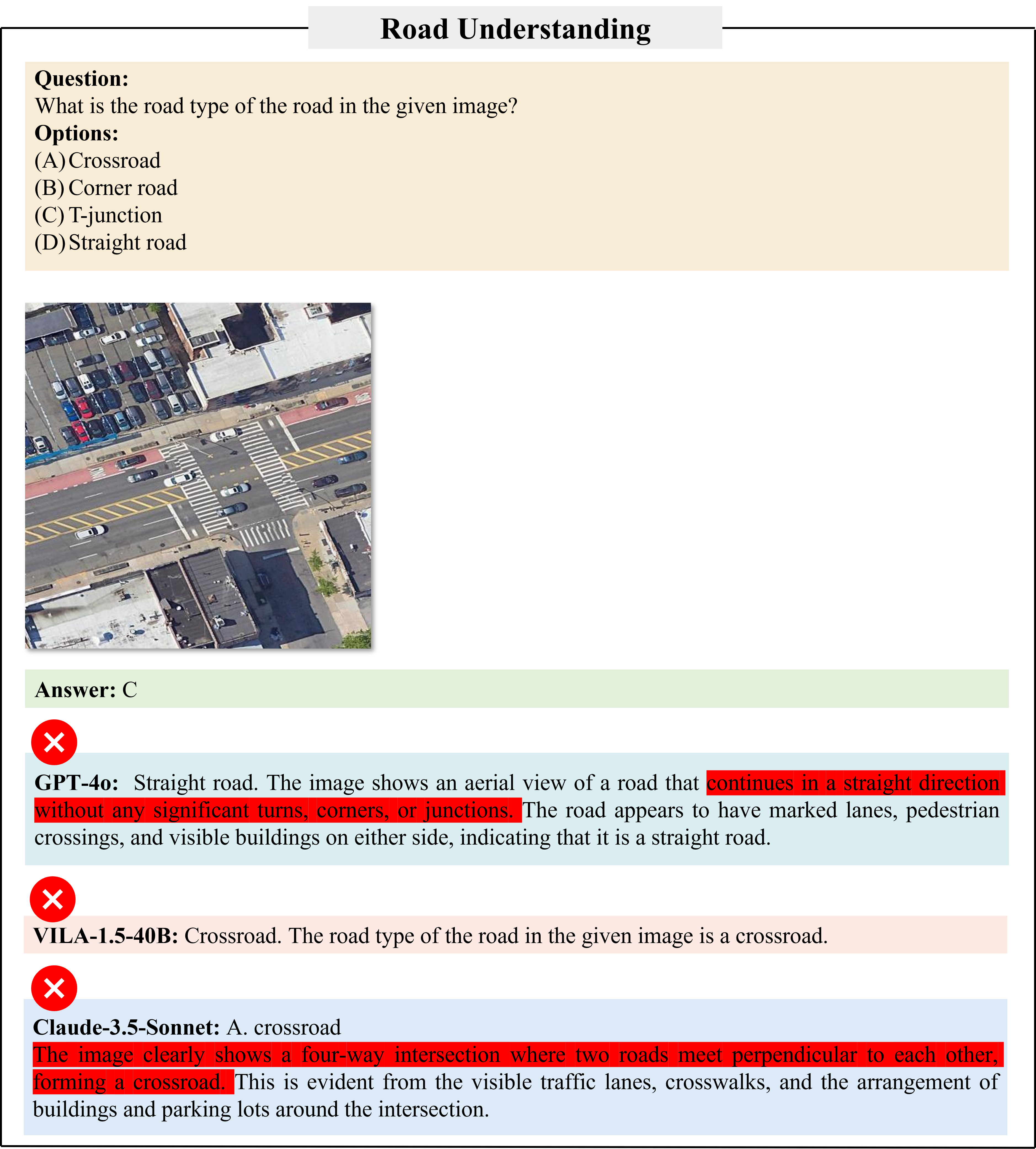

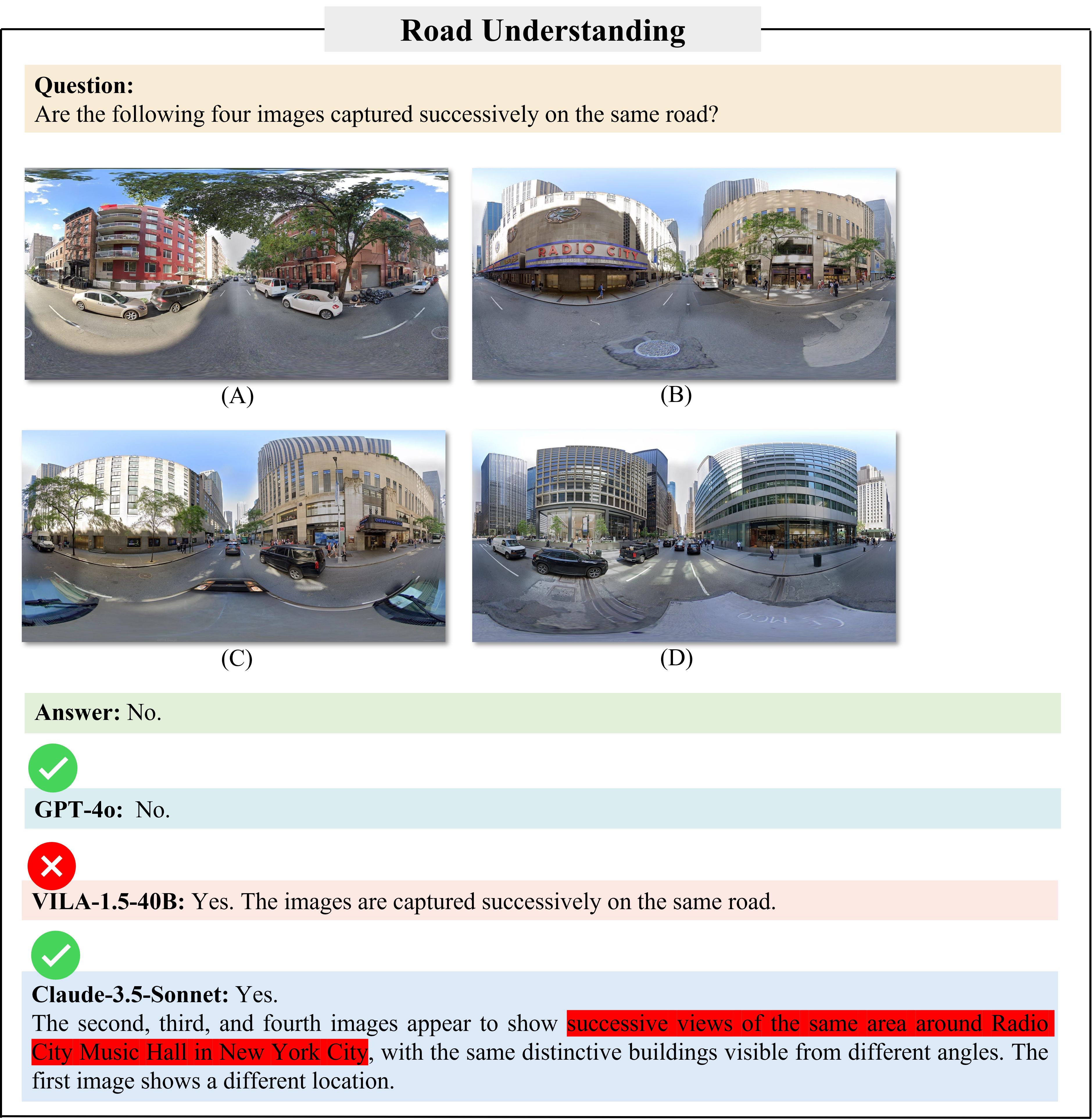

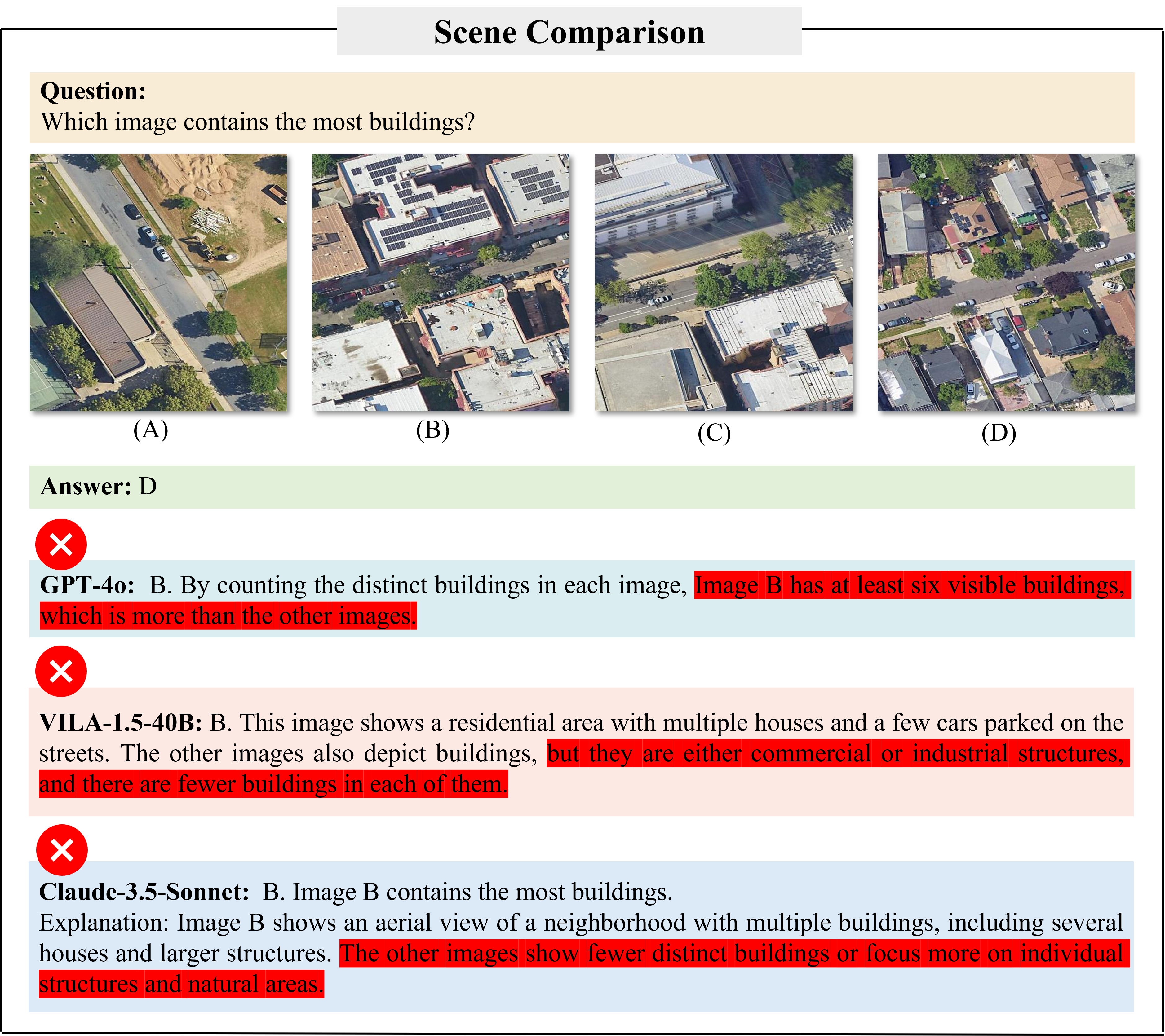

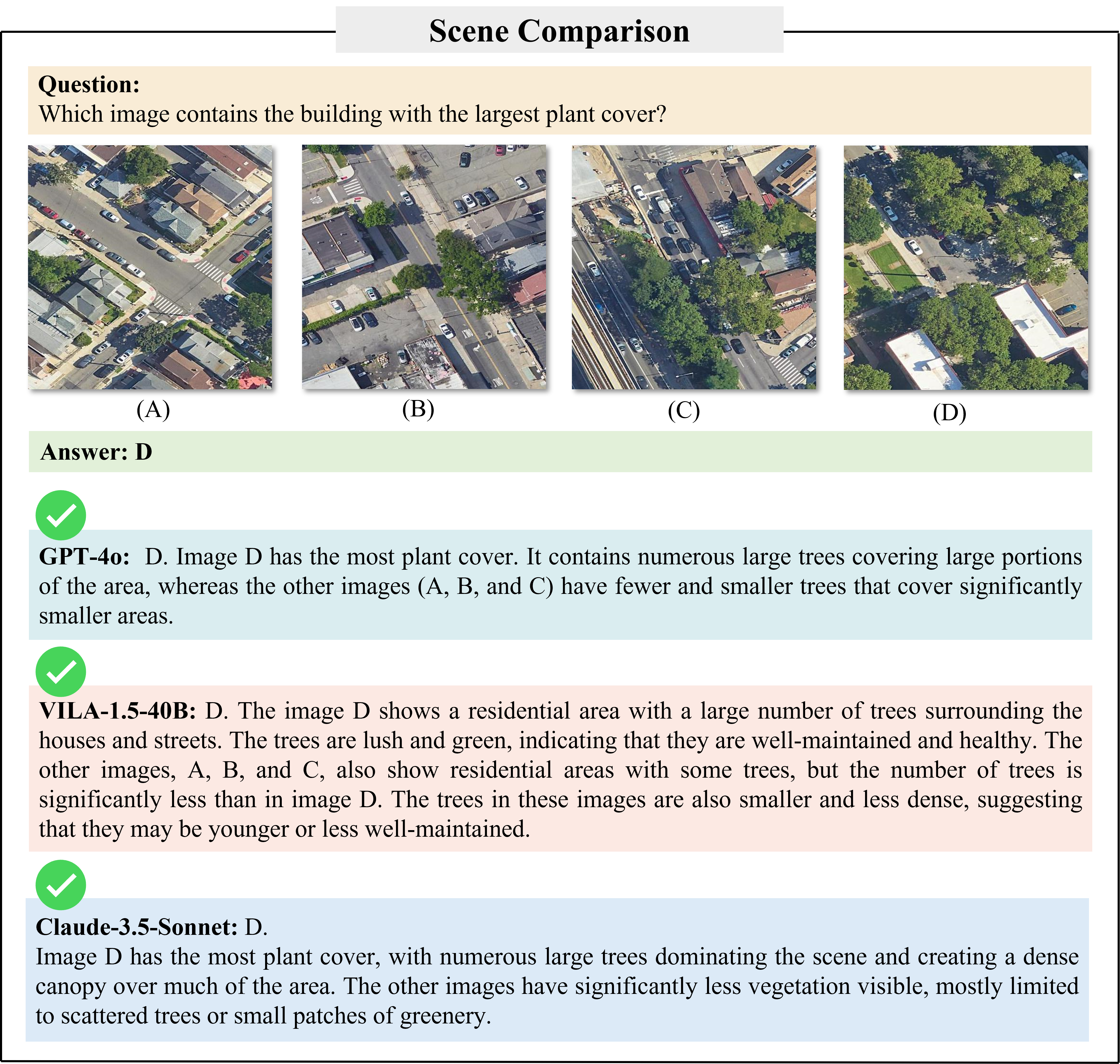

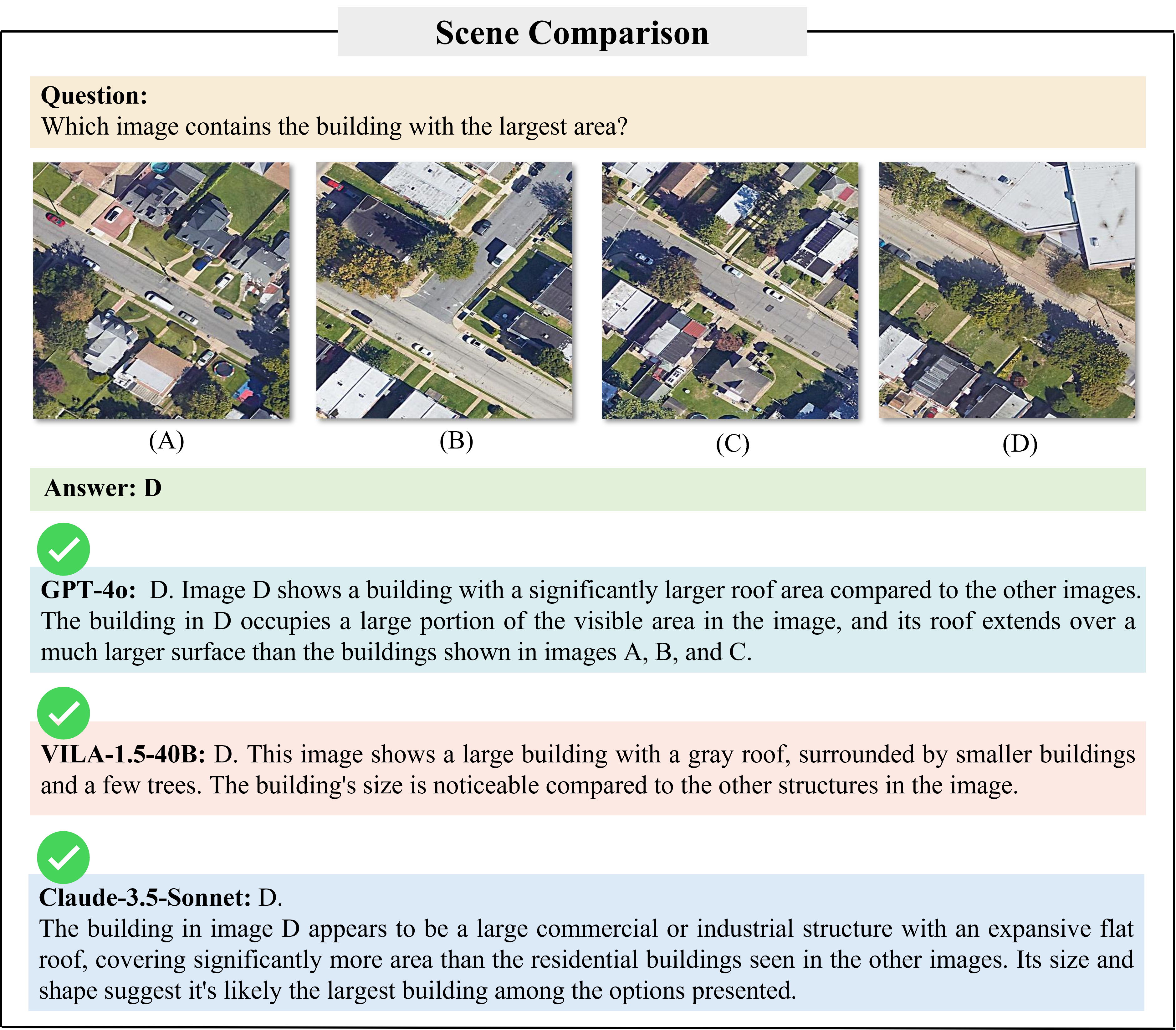

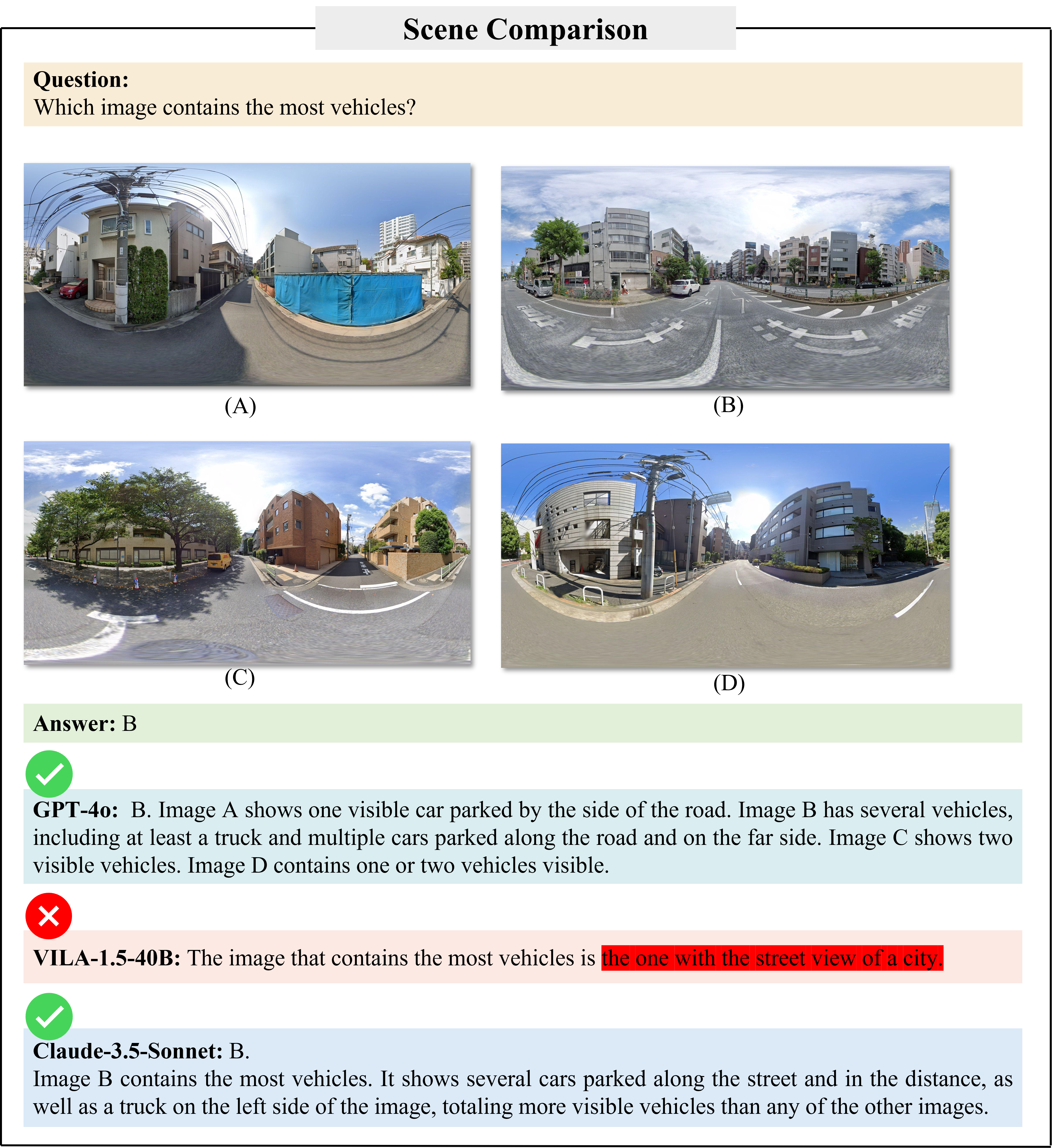

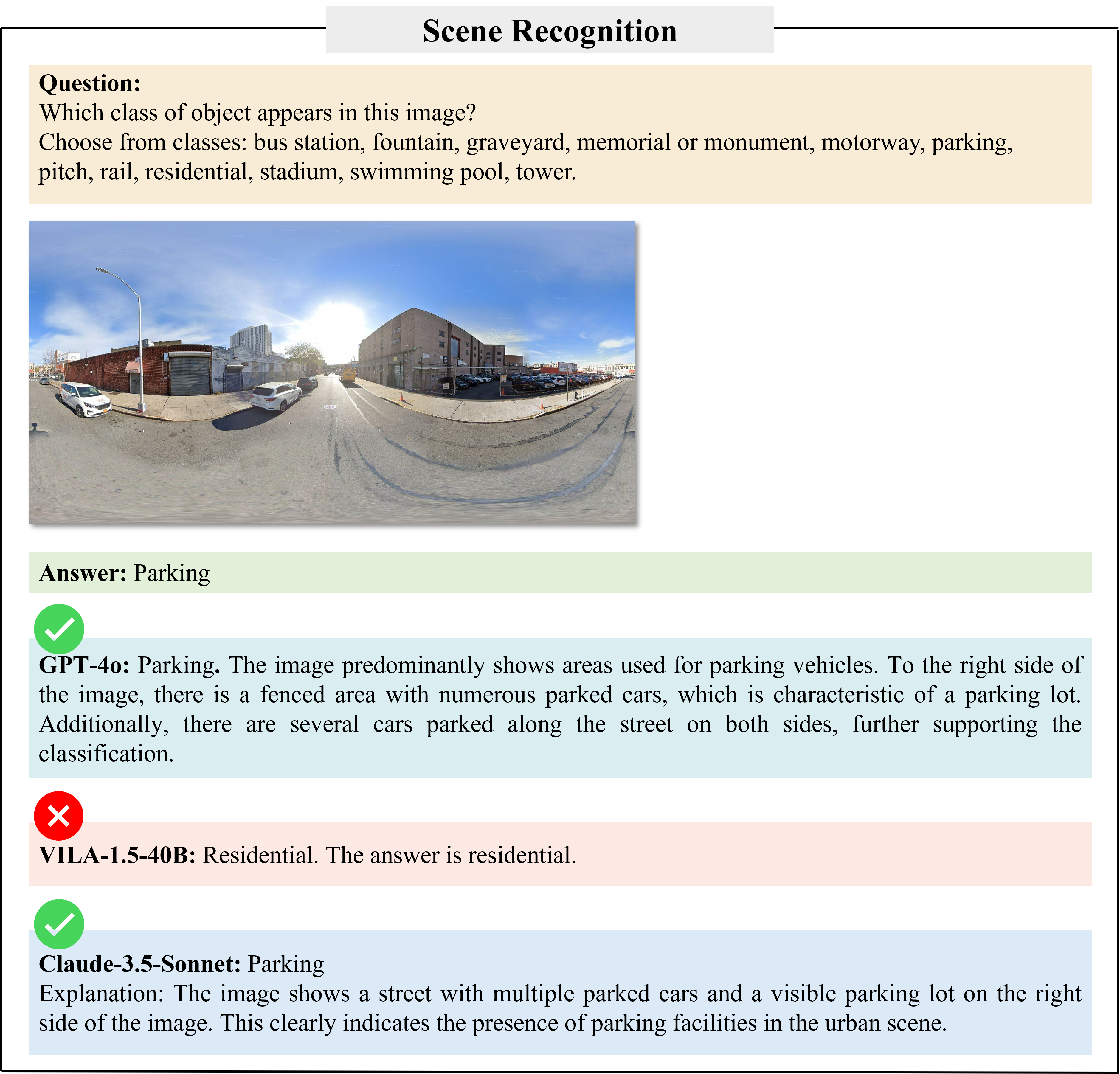

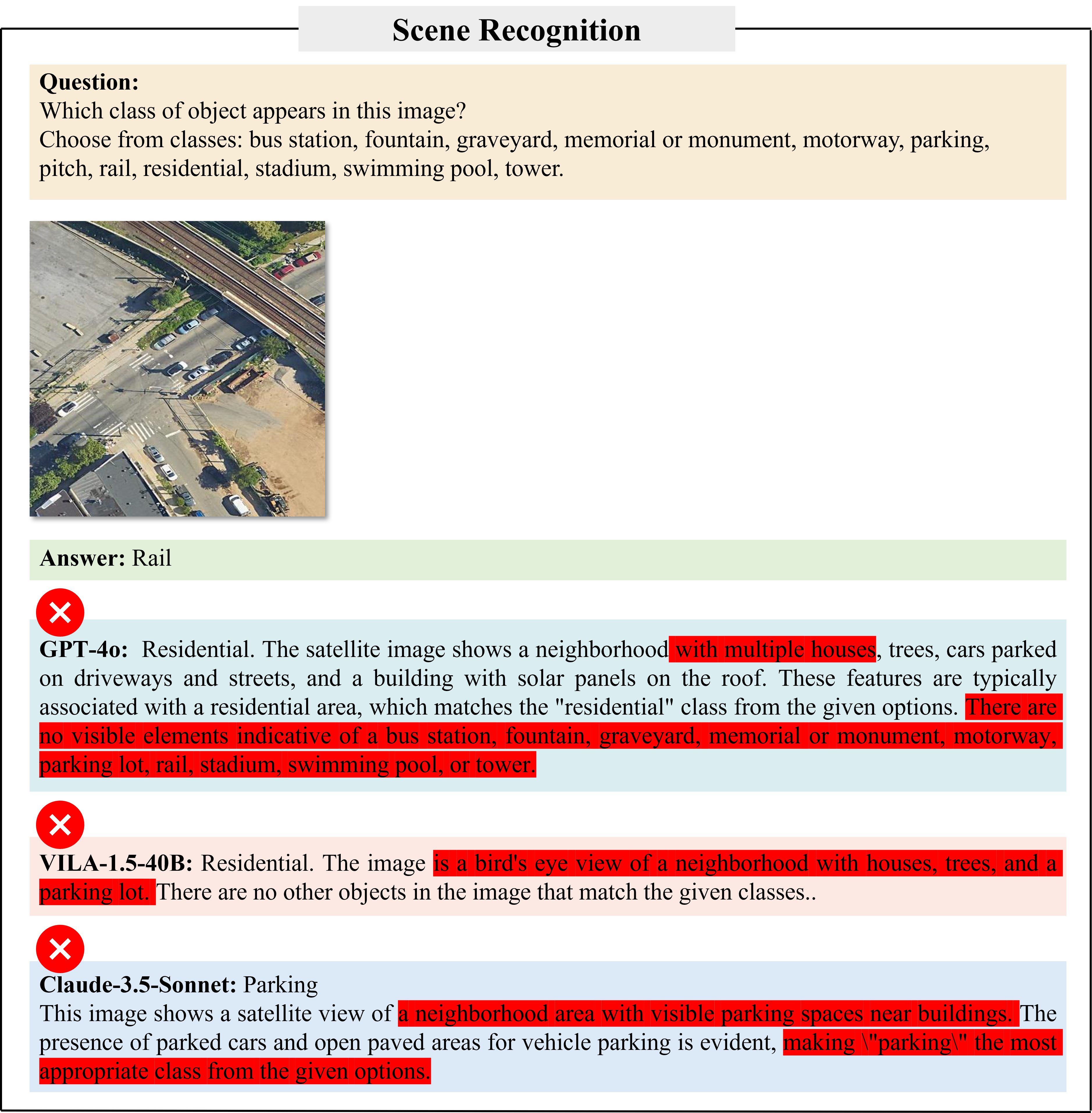

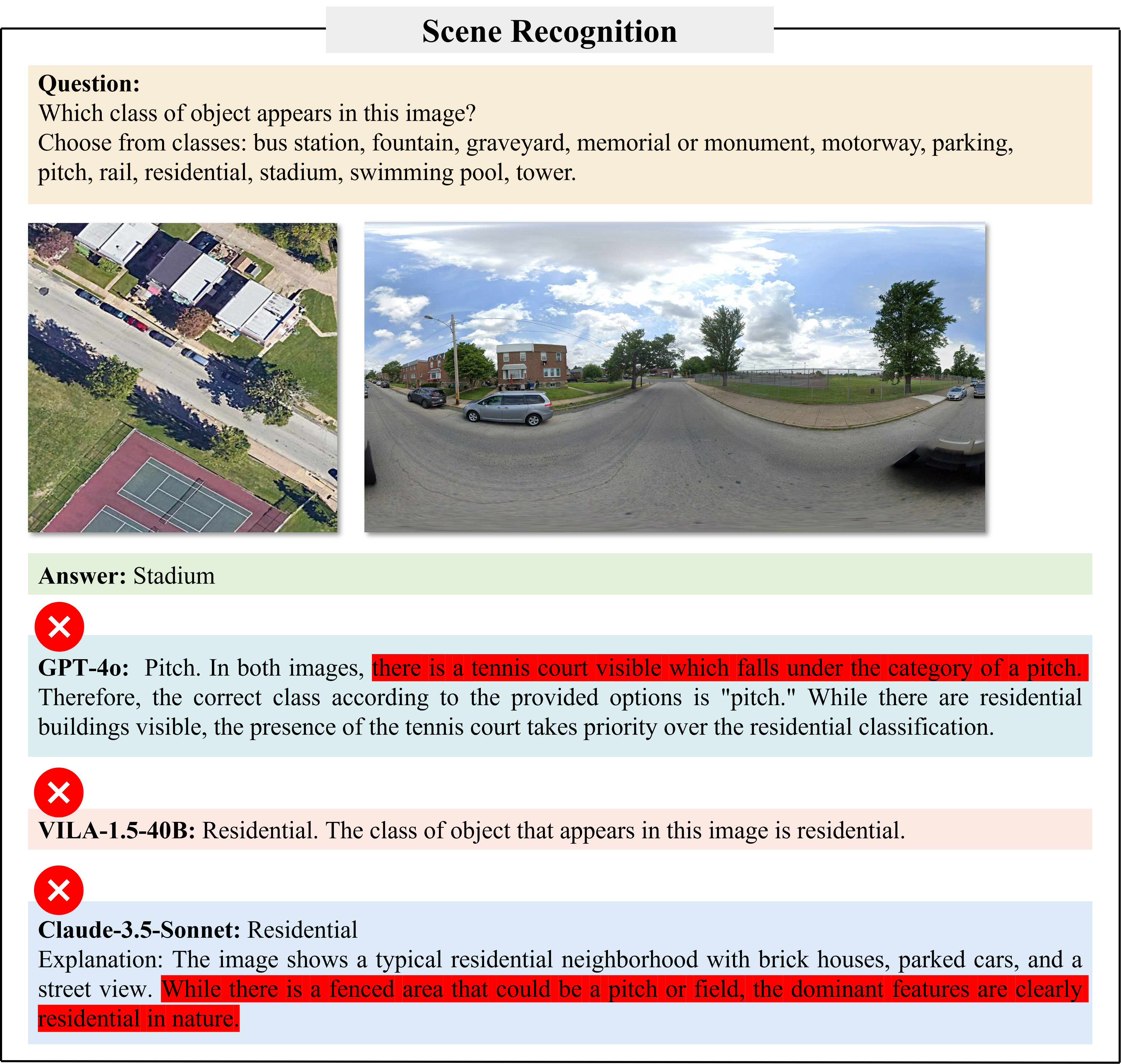

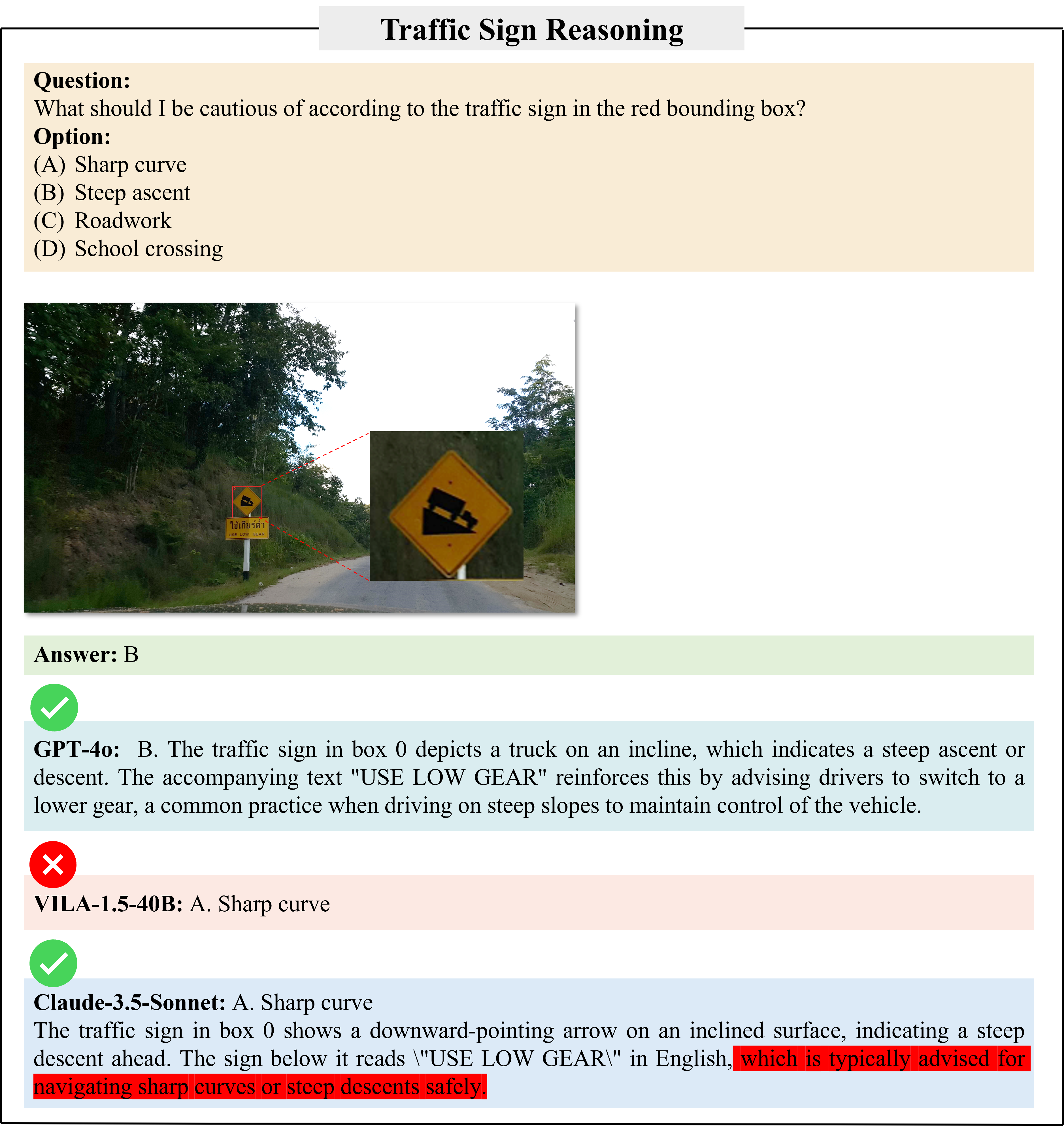

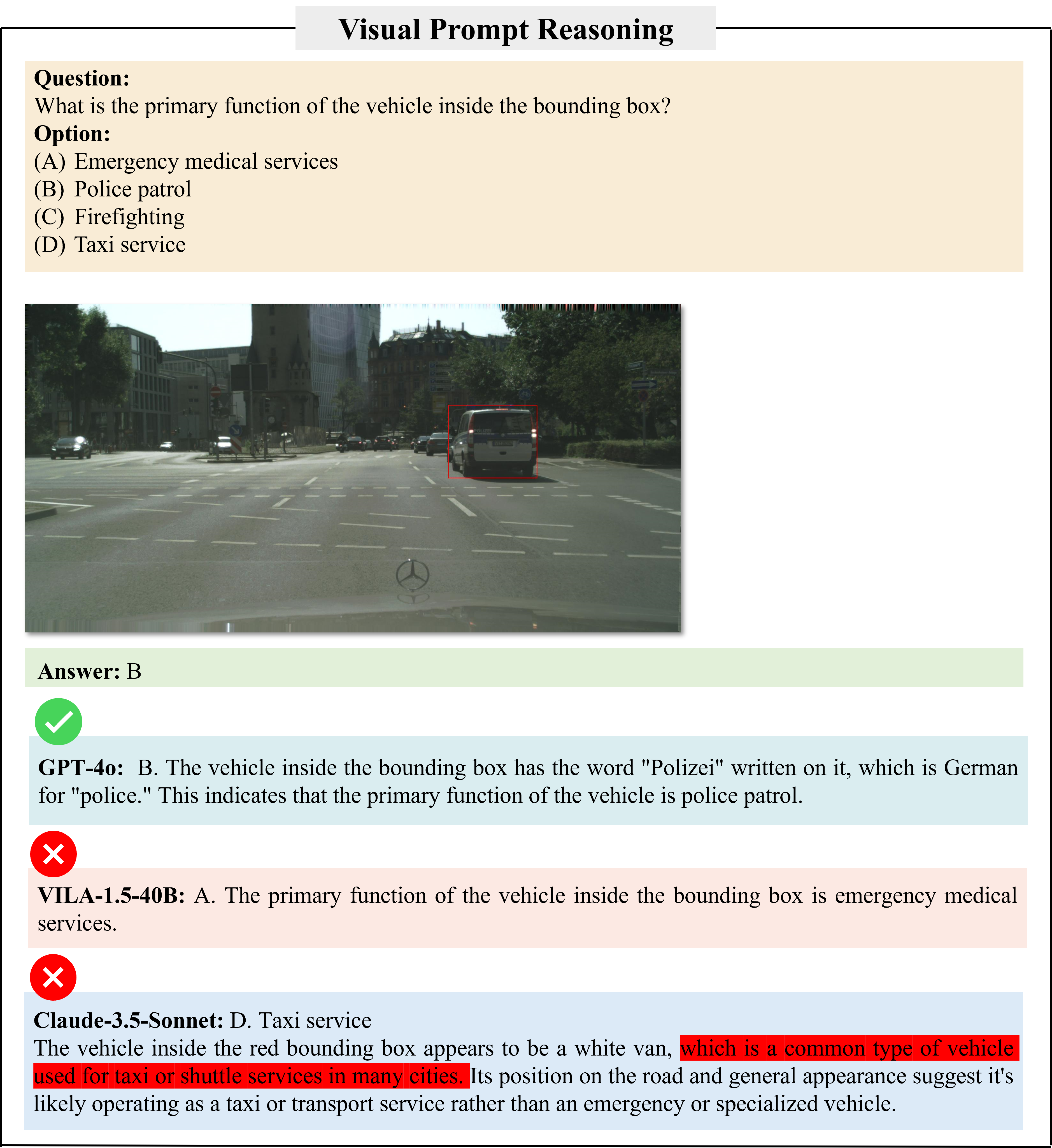

UrBench introduces 14 diverse tasks in the urban environment, covering multiple different views. While humans handle most tasks easily, we find LMMs still struggle.

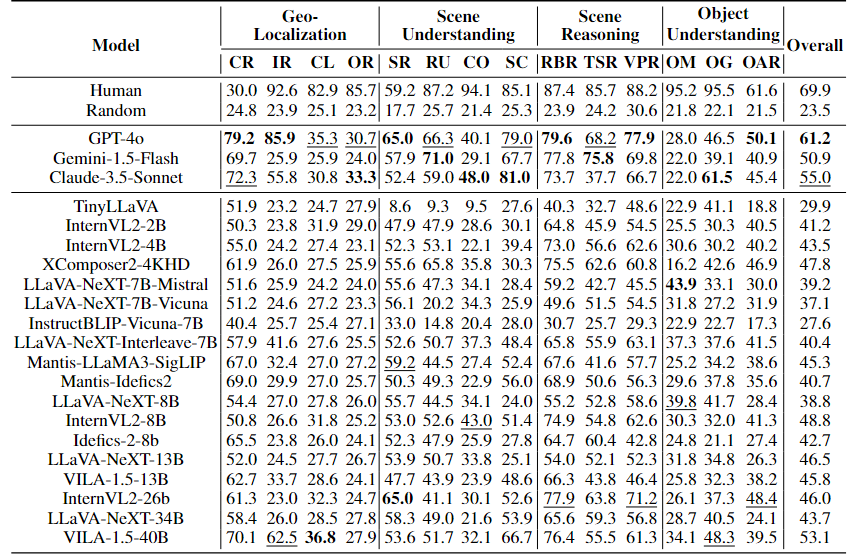

UrBench poses significant challenges to current SoTA LMMs. We find that the best-performing closed-source model, GPT-4o, and the best-performing open-source model, VILA-1.5-40B, achieve only 61.2% and 53.1% accuracy, respectively. Interestingly, our findings indicate that the primary limitation of these models lies in their ability to comprehend UrBench questions, not in their capacity to process multiple images: multi-image models and their single-image counterparts, such as LLaVA-NeXT-Interleave and LLaVA-NeXT-8B in the table, show little difference in performance. Overall, the challenging nature of our benchmark indicates that current LMMs' strong performance on general benchmarks does not generalize to multi-view urban scenarios.
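To make the reported numbers concrete, the following is a minimal sketch of how per-task accuracy, overall accuracy, and an average model-to-human gap could be computed for multiple-choice answers. It is not the UrBench evaluation code: the record layout `(task, predicted, answer)`, the function names `accuracy_by_task` and `mean_gap`, and the choice to average the gap over per-task accuracies are all assumptions made for illustration, and the sample records are invented.

```python
from collections import defaultdict


def accuracy_by_task(records):
    """Return (per-task accuracy, overall accuracy).

    `records` is an iterable of (task, predicted, answer) tuples.
    This layout is hypothetical, not the UrBench data format.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for task, predicted, answer in records:
        total[task] += 1
        # Compare option letters, ignoring case and surrounding whitespace.
        if predicted.strip().upper() == answer.strip().upper():
            correct[task] += 1
    per_task = {task: correct[task] / total[task] for task in total}
    overall = sum(correct.values()) / sum(total.values())
    return per_task, overall


def mean_gap(model_acc, human_acc):
    """Average (human - model) accuracy over tasks present in both dicts.

    Averaging per-task gaps is an assumption; the source does not state
    how its average performance gap is aggregated.
    """
    shared = model_acc.keys() & human_acc.keys()
    return sum(human_acc[t] - model_acc[t] for t in shared) / len(shared)


# Invented toy records, for illustration only.
records = [
    ("counting", "A", "A"),
    ("counting", "B", "C"),
    ("orientation", "d", " D "),
]
per_task, overall = accuracy_by_task(records)
gap = mean_gap(per_task, {"counting": 1.0, "orientation": 1.0})
```

Reporting accuracy per task as well as overall matters here because an overall score can hide tasks on which a model is far below human performance.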